NVIDIA’s Hidden Growth Driver the Market Is Missing

21:00 August 6, 2025 EDT

Key Points:

NVIDIA is scheduled to report its fiscal Q2 2026 earnings on August 27. While investors remain focused on the company’s data center performance, an equally critical segment is often overlooked by the market.

In fiscal year 2025, revenue from NVIDIA’s networking business surpassed gaming, long the company’s second-largest revenue stream.

In building AI supercomputers, networking infrastructure plays an irreplaceable role, especially in connecting large-scale GPU clusters, optimizing data flow, and reducing latency.

NVIDIA is set to announce its fiscal Q2 2026 earnings on August 27, 2025. Investor attention continues to center on the company’s data center segment, which serves as the primary revenue channel for its high-performance AI processors.

However, the data center business extends far beyond chip sales. Networking, an essential but frequently overlooked component, is rapidly emerging as a growth driver in its own right. The segment already has a clear growth trajectory and strategic importance, yet the broader market still underestimates its future contribution.

Networking Segment: Growth Trajectory

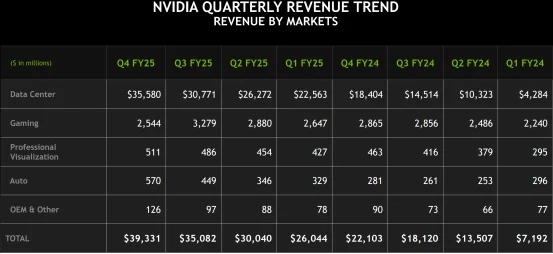

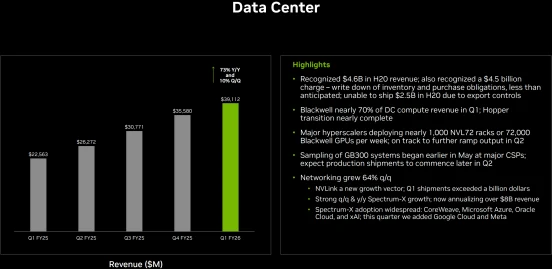

It is widely recognized that NVIDIA’s data center segment is its most important revenue driver. In fiscal year 2025, the company’s data center revenue reached $115.2 billion, with $102.1 billion generated from GPU sales and $12.9 billion from networking products. Notably, revenue from networking has now surpassed NVIDIA’s traditional second-largest business line—gaming, which brought in $11.4 billion during the same period.

Source: NVIDIA

In the first quarter of fiscal year 2026, NVIDIA’s data center revenue totaled $39.1 billion, with networking contributing $4.9 billion, accounting for 12.5% of the segment.

Source: NVIDIA
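The share figures above can be checked with a few lines of arithmetic. This sketch uses only the dollar amounts quoted in the text (in billions); the dictionary and function names are illustrative. Note that the quoted compute and networking figures sum to $115.0 billion, $0.2 billion below the $115.2 billion total, presumably because of rounding in the reported figures.

```python
# Dollar figures in billions, as quoted in the text (NVIDIA reports).
FY2025 = {"data_center": 115.2, "compute": 102.1, "networking": 12.9, "gaming": 11.4}
Q1_FY2026 = {"data_center": 39.1, "networking": 4.9}


def share(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


fy25_net_share = share(FY2025["networking"], FY2025["data_center"])
q1_net_share = share(Q1_FY2026["networking"], Q1_FY2026["data_center"])
net_minus_gaming = FY2025["networking"] - FY2025["gaming"]
# Gap between the reported total and the sum of the two quoted components.
rounding_gap = FY2025["data_center"] - FY2025["compute"] - FY2025["networking"]

print(f"FY2025 networking share of data center: {fy25_net_share:.1f}%")        # 11.2%
print(f"Q1 FY2026 networking share of data center: {q1_net_share:.1f}%")      # 12.5%
print(f"FY2025 networking lead over gaming: ${net_minus_gaming:.1f}B")        # $1.5B
print(f"Unexplained gap in FY2025 data center total: ${rounding_gap:.1f}B")   # $0.2B
```

On these numbers, networking’s share of data center revenue edged up from about 11.2% across fiscal 2025 to about 12.5% in the first quarter of fiscal 2026.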

While networking still represents a relatively small portion of overall revenue, it is growing at a rapid pace and plays a direct role in enabling efficient AI system performance.

At the heart of networking’s value is its ability to synchronize thousands of GPUs so that they function as a single, unified compute engine. Without high-throughput, low-latency data transfer, GPUs sit idle while waiting for data from their peers, and that bottleneck undermines the performance of the entire data center. As AI models grow more complex, and as demand for inference workloads rises, high-performance networking is becoming an urgent need. Inference, which runs pre-trained models to generate results in real time, depends on fast, reliable data movement, so network performance is critical to overall system efficiency.
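To make the synchronization cost concrete, the sketch below applies the textbook alpha-beta cost model to a ring all-reduce, a collective commonly used to combine results across GPUs. It is a simplified illustration under stated assumptions, not a model of any NVIDIA product or of how NVIDIA’s communication libraries choose algorithms; the function name, message size, latency, and bandwidth values are all hypothetical.

```python
def ring_allreduce_seconds(n_gpus: int, message_bytes: float,
                           bandwidth_bytes_per_s: float, latency_s: float) -> float:
    """Estimate ring all-reduce time with a simple alpha-beta cost model.

    A ring all-reduce runs 2 * (n - 1) steps (a reduce-scatter phase followed
    by an all-gather phase). In each step every GPU sends one chunk of
    message_bytes / n bytes to its neighbor, paying a fixed per-step latency
    plus the transfer time of that chunk.
    """
    if n_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    steps = 2 * (n_gpus - 1)
    chunk_bytes = message_bytes / n_gpus
    return steps * (latency_s + chunk_bytes / bandwidth_bytes_per_s)


if __name__ == "__main__":
    # Hypothetical workload: 1 GB of data shared across 8 GPUs with
    # 5 microseconds of per-step latency. Link speeds are illustrative only.
    for gb_per_s in (50, 400):
        t = ring_allreduce_seconds(8, 1e9, gb_per_s * 1e9, 5e-6)
        print(f"{gb_per_s} GB/s link: {t * 1e3:.1f} ms per all-reduce")
```

Under these assumptions, the slower link takes roughly 35 ms per all-reduce versus about 4.4 ms for the faster one. If that exchange happens on every step of a workload, the difference is time the GPUs spend waiting rather than computing.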

NVIDIA executives have emphasized that in building AI supercomputers, networking infrastructure is absolutely essential—particularly for connecting massive GPU clusters, optimizing data flows, and minimizing latency. Without a robust networking backbone, even the most powerful AI chips cannot reach their full performance potential.

Core Products

Currently, NVIDIA’s networking business spans three major technology pillars: InfiniBand, Ethernet, and NVLink. The company delivers end-to-end solutions across this stack—including network interface cards (NICs), switches, data processing units (DPUs), and software frameworks—forming the backbone of AI data center communication infrastructure.

NVLink provides high-speed communication between GPUs within a server and, in rack-scale systems, across servers, improving compute efficiency. InfiniBand connects multiple server nodes into large-scale AI compute clusters, while Ethernet handles front-end networking for storage and system management. Together, these technologies deliver the low-latency, high-throughput data transfer that AI workloads require. Key products include the BlueField-3 DPU, the NVIDIA Quantum InfiniBand platform, Spectrum series Ethernet switches, and ConnectX-7 network interface cards.

Source: NVIDIA
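The division of labor among the three fabrics can be summarized as a simple routing rule. The sketch below is a hypothetical illustration of the simplified split described above, not NVIDIA software: it assumes one server equals one NVLink domain (rack-scale NVLink systems extend that domain across servers) and ignores deployments where Ethernet, such as Spectrum-X, also carries GPU-to-GPU traffic. `Fabric`, `Endpoint`, and `pick_fabric` are invented names.

```python
from dataclasses import dataclass
from enum import Enum


class Fabric(Enum):
    NVLINK = "NVLink"          # GPU-to-GPU inside one server
    INFINIBAND = "InfiniBand"  # back-end fabric between server nodes
    ETHERNET = "Ethernet"      # front-end: storage and system management


@dataclass(frozen=True)
class Endpoint:
    node: int     # hypothetical server index
    is_gpu: bool  # False for storage or management hosts


def pick_fabric(src: Endpoint, dst: Endpoint) -> Fabric:
    """Map a transfer to a fabric using the simplified three-tier split."""
    if src.is_gpu and dst.is_gpu:
        # Simplification: treat one server as one NVLink domain.
        return Fabric.NVLINK if src.node == dst.node else Fabric.INFINIBAND
    return Fabric.ETHERNET


if __name__ == "__main__":
    gpu_a, gpu_b, gpu_c = Endpoint(0, True), Endpoint(0, True), Endpoint(1, True)
    storage = Endpoint(9, False)
    print(pick_fabric(gpu_a, gpu_b).value)    # NVLink
    print(pick_fabric(gpu_a, gpu_c).value)    # InfiniBand
    print(pick_fabric(gpu_a, storage).value)  # Ethernet
```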

NVIDIA also offers several additional technologies. The “What Just Happened” telemetry feature enables rapid detection of network faults. The AI-based Morpheus security framework strengthens system protection. The Spectrum-X Ethernet platform, combined with BlueField DPUs, adds congestion control and adaptive routing designed for AI traffic and is widely adopted in AI systems. The latest silicon photonics switches are designed to scale to millions of GPUs, powering the next generation of AI factories.
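Adaptive routing is easiest to see next to its static alternative. In the toy model below, static equal-cost multipath (ECMP) hashing pins every packet of a flow to one path, so two large flows that hash to the same path create a hotspot, while adaptive routing sends each packet down the currently least-loaded path. This is a generic sketch of the idea, not Spectrum-X’s actual algorithm; the function names and flows are hypothetical, queues never drain, and the packet reordering that per-packet routing causes (which real systems must handle at the receiver) is ignored.

```python
import zlib


def ecmp_static(flow_id: str, n_paths: int) -> int:
    """Static ECMP-style hashing: every packet of a flow takes the same path."""
    return zlib.crc32(flow_id.encode()) % n_paths


def adaptive_pick(load: list[int]) -> int:
    """Adaptive choice: the path with the fewest queued packets (lowest index on ties)."""
    return min(range(len(load)), key=load.__getitem__)


def simulate(flows: list[tuple[str, int]], n_paths: int, adaptive: bool) -> list[int]:
    """Place unit-size packets on paths and return the packet count per path."""
    load = [0] * n_paths
    for flow_id, packets in flows:
        for _ in range(packets):
            path = adaptive_pick(load) if adaptive else ecmp_static(flow_id, n_paths)
            load[path] += 1
    return load


if __name__ == "__main__":
    flows = [("flow-a", 4), ("flow-b", 4), ("flow-c", 4), ("flow-d", 4)]
    print("static  :", simulate(flows, 4, adaptive=False))
    print("adaptive:", simulate(flows, 4, adaptive=True))  # [4, 4, 4, 4]
```

The static result depends on how the flow IDs hash. Whenever two of the four flows collide, one path carries at least 8 packets while another sits empty, whereas the adaptive version spreads the same 16 packets evenly.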

These products provide complete InfiniBand and Ethernet solutions across a wide range of deployment scenarios—from enterprise servers to high-performance supercomputers. Applications include cloud computing, enterprise IT, financial services, storage, big data analytics, machine learning, and telecommunications. Through software-defined and hardware-accelerated networking, NVIDIA reduces the computational burden of network management, allowing GPUs and CPUs to focus on core workloads.

Competition Is Taking Shape

In the data center Ethernet switch market, NVIDIA generated $1.46 billion in sales during the first calendar quarter of 2025, capturing a 12.5% market share and establishing itself as a significant player. Its growth has outpaced that of Arista Networks, and NVIDIA is steadily closing the gap with market leader Cisco in Ethernet switch revenue.
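The two figures above also imply the approximate size of the overall market. The sketch assumes the 12.5% share is a revenue share measured over the same quarter and market definition as the $1.46 billion; the research firm’s published total may differ slightly because of rounding. The helper name is illustrative.

```python
def implied_market_size(company_sales: float, market_share: float) -> float:
    """Back out total market size from one vendor's sales and its share."""
    if not 0 < market_share <= 1:
        raise ValueError("market_share must be a fraction in (0, 1]")
    return company_sales / market_share


nvidia_sales_b = 1.46  # NVIDIA data center Ethernet switch sales, Q1 2025, $ billions (from the text)
nvidia_share = 0.125   # NVIDIA's share of that market (from the text)

market_b = implied_market_size(nvidia_sales_b, nvidia_share)
print(f"Implied market size: ${market_b:.2f}B")                    # $11.68B
print(f"Held by other vendors: ${market_b - nvidia_sales_b:.2f}B")  # $10.22B
```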

According to the first-quarter 2025 report from market research firm Dell’Oro Group, demand for NVIDIA’s Ethernet switch products has been driven strongly by AI workloads, especially in the high-performance 400GbE and 800GbE switch segments. By integrating its GPU, DPU, and networking technologies, NVIDIA offers an end-to-end solution optimized for AI, gaining a competitive edge in AI-driven data center markets.

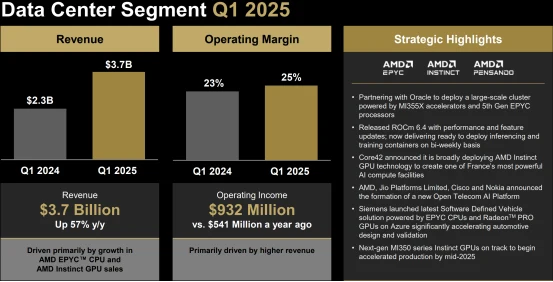

However, NVIDIA faces mounting pressure from multiple competitors. AMD, with its Instinct GPU series and associated networking solutions, reported roughly 57% year-over-year growth in data center segment revenue in Q1 2025 and is actively capturing AI-related market share.

Source: AMD

Meanwhile, cloud giants such as Amazon, Google, and Microsoft are accelerating development of proprietary AI chips and networking technologies to reduce reliance on NVIDIA. Additionally, the industry consortium behind the Ultra Accelerator Link (UALink) standard—supported by AMD, Intel, and Broadcom—aims to provide an open networking protocol for high-performance computing, directly challenging NVIDIA’s NVLink technology.

The AI industry is increasingly focused on inference workloads, which place heavier demands on computing and networking infrastructure. Although inference was initially considered less resource-intensive than training, advanced inference tasks such as autonomous agent workflows are approaching training-level complexity. This shift increases demand for high-performance networking that keeps data transfer fast and systems efficient.

Despite these challenges, NVIDIA’s networking solutions remain well-positioned within this evolving landscape, with competitors yet to pose a substantial threat to its market leadership.

Investment Value

NVIDIA’s networking business is a critical pillar of its AI data center strategy, tying its GPUs together into efficient systems. Its $12.9 billion revenue contribution in fiscal 2025, together with rapid growth, underscores the segment’s rising weight in the company’s overall revenue. As AI adoption accelerates across industries, demand for high-performance networking is likely to keep rising, especially in large, scalable data center environments.

Although AMD and the UALink consortium are making inroads into this market, they currently lack the comprehensive and highly integrated hardware-software ecosystem that NVIDIA offers. NVIDIA’s investments in silicon photonics, DPU programming, and security isolation have established significant technological barriers that are difficult to replicate in the short term.

Source: TradingView

In the upcoming earnings report on August 27, 2025, the contribution of the networking segment to data center revenue will be a key focus for investors. Continued growth in this business could signal NVIDIA’s ability to further expand its market share in the AI infrastructure sector.

Disclaimer: The content of this article does not constitute a recommendation or investment advice for any financial products.
