Broadcom’s AI Bet

02:40 October 15, 2025 EDT

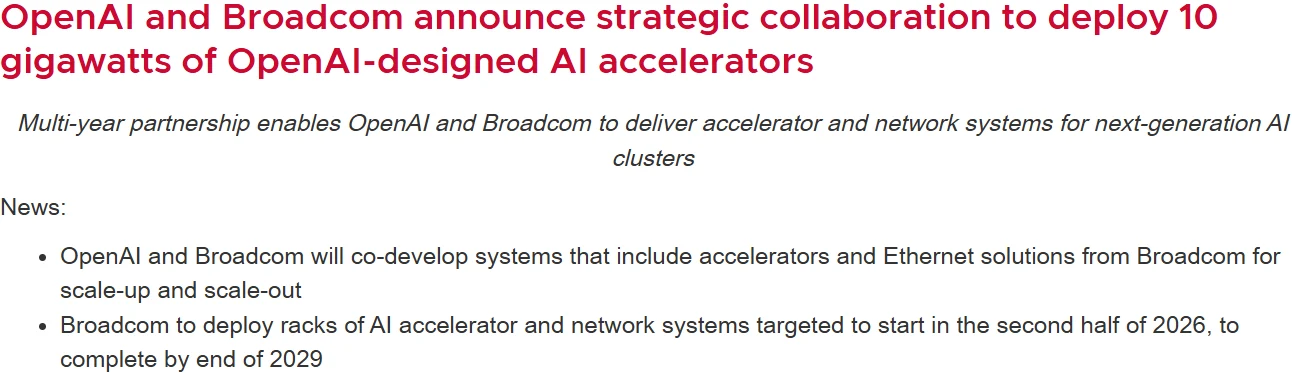

On October 13, 2025, local time, Broadcom and OpenAI jointly announced a strategic partnership to co-develop and deploy 10 GW of custom AI accelerators. The announcement drove Broadcom’s stock up 9.88% on the day, lifting its market capitalization to $1.68 trillion, a one-day gain of over $150 billion.

Source: TradingView

At the same time, Broadcom unveiled the industry’s first Wi-Fi 8 chip solution and a new networking chip, “Thor Ultra,” aimed at further consolidating its control over AI computing networks.

The collaboration with OpenAI on compute capacity, coupled with the launch of the new networking chip, has been interpreted by the market as Broadcom directly challenging industry leader NVIDIA in the AI data center space. These initiatives may signal that Broadcom is integrating AI technology into its core operations to solidify its transformation from a traditional semiconductor giant to a key player in AI infrastructure.

Positioning in the AI Customization Market

The partnership between OpenAI and Broadcom marks a significant shift in the AI infrastructure sector. Under the agreement, the two companies will jointly develop and deploy 10 GW of custom AI accelerators, a power requirement comparable to the electricity demand of some small countries.

Deployment is scheduled to begin in the second half of 2026 and to be completed by the end of 2029, in line with the explosive growth OpenAI anticipates in its compute demand.

Source: Broadcom

Under the terms of the collaboration, OpenAI will lead the design of the AI accelerator and system architecture, while Broadcom will handle joint development and deployment. This division leverages OpenAI’s expertise in AI model algorithms and Broadcom’s technical capabilities in semiconductor design and system integration.

Charlie Kawwas, President of Broadcom’s Semiconductor Solutions Group, commented, “Combining custom AI accelerators with Ethernet solutions will optimize the next generation of AI infrastructure in both performance and cost.”

From a strategic perspective, the partnership is a key part of OpenAI’s effort to diversify its supply chain. OpenAI is currently advancing a 20 GW compute expansion plan that represents an investment exceeding $1 trillion. Its projected 2025 revenue, by contrast, is only $13 billion, and profitability is not expected until 2029, which highlights a significant funding gap.

For Broadcom, the collaboration secures a high-value client. Bernstein Research analyst Stacy Rasgon estimates that the deal could generate over $100 billion in additional revenue for Broadcom over the next three to four years, exceeding 2.5 times Broadcom’s projected fiscal 2025 revenue.

The partnership builds on an existing agreement between the two companies for joint AI accelerator development and supply. Notably, unlike prior deals between OpenAI and NVIDIA or AMD, this collaboration does not involve direct investment or subsidies.

However, custom chips are not general-purpose, so they are difficult to resell or redeploy to other customers. If OpenAI’s funding falters or demand falls short of expectations, Broadcom may find it difficult to recoup its upfront R&D costs. Broadcom CEO Hock Tan also acknowledged that while building large-scale AI systems can boost profits, it will dilute gross margins, though he did not disclose by how much.

Technology Positioning

Beyond its collaboration with OpenAI, Broadcom has also strengthened its footprint in AI networking technologies. On October 14, 2025, the company announced the launch of a new network chip, Thor Ultra, capable of supporting connections for hundreds of thousands of chips and providing the underlying network infrastructure for AI applications such as ChatGPT.

As the industry’s first 800G AI Ethernet NIC, Thor Ultra complies with the open Ultra Ethernet Consortium (UEC) specification and introduces a series of RDMA innovations. These include packet-level multipathing, direct delivery of out-of-order packets to XPU memory, selective retransmission, and programmable congestion control algorithms.

Source: Broadcom

Simultaneously, Broadcom unveiled the industry’s first Wi-Fi 8 chip solutions, including BCM6718, BCM43840, BCM43820, and BCM43109, targeting home and carrier APs, enterprise APs, as well as edge devices such as smartphones and laptops.

Broadcom will also open its Wi-Fi 8 intellectual property for licensing, enabling IoT, automotive, and mobile device manufacturers to quickly adopt AI-prioritized wireless connectivity. These technology deployments complement Broadcom’s AI accelerator business, allowing the company to offer a comprehensive AI compute solution spanning from data centers to edge devices.

From Traditional Semiconductor Company to AI Infrastructure Leader

Although NVIDIA currently dominates the AI hardware sector, controlling roughly 80–90% of the data center AI accelerator market, Broadcom has carved out a differentiated competitive path by focusing on customized chips.

It is important to note that Broadcom is not directly competing with NVIDIA’s off-the-shelf GPUs. Instead, the company has chosen ASICs (Application-Specific Integrated Circuits) as its core AI chip strategy and partners with cloud giants to develop dedicated chips. This approach means Broadcom’s growth does not necessarily come at NVIDIA’s expense, as the overall AI compute market continues to expand rapidly.

From a technical perspective, ASICs are optimized for fixed inference workloads, offering over 50% higher energy efficiency and 30% lower cost per unit of compute compared with GPUs, making them well-suited for large-scale inference demands from cloud providers like Meta and Google. GPUs, by contrast, are better suited for training workloads with frequently changing parameters.

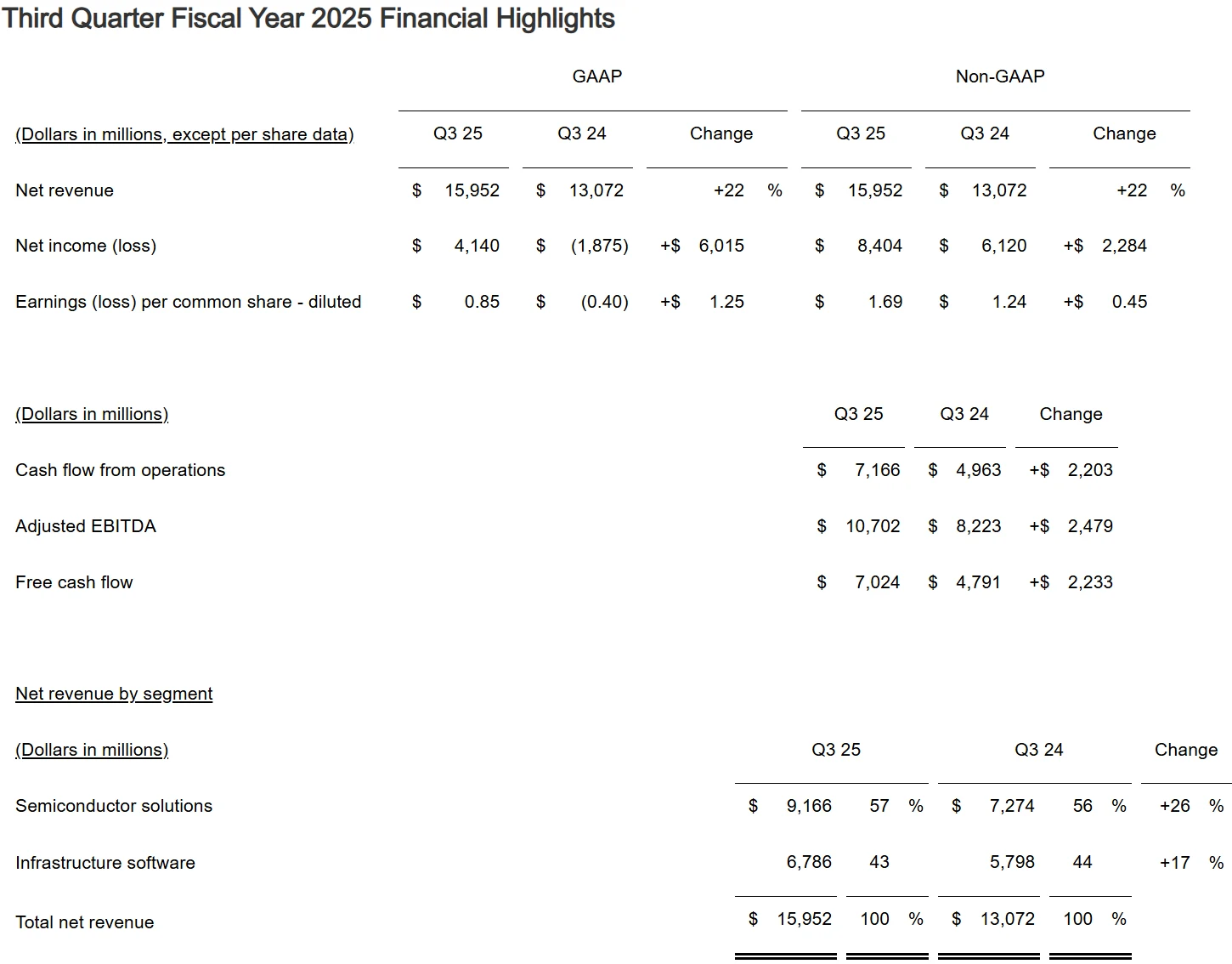

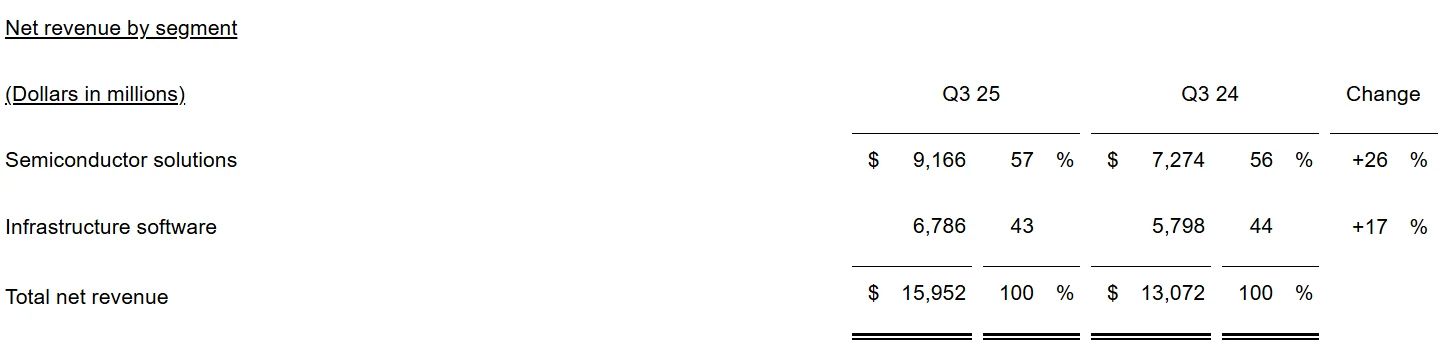

Broadcom’s AI investments have already begun to show tangible results. In Q3 of fiscal 2025, the company reported revenues of $15.95 billion, up 22% year-over-year, setting a new record.

Source: Broadcom

Within this, AI-related revenue jumped 63% year-over-year to $5.2 billion, marking 11 consecutive quarters of growth. The company expects AI semiconductor sales to rise further to $6.2 billion in Q4. Notably, the custom AI accelerator (XPU) business accounts for 65% of AI revenue, while the infrastructure software division, which includes VMware, contributed $6.79 billion in Q3, up 43% YoY, with 17% organic growth.

Source: Broadcom

Market data indicates that the ASIC segment is rapidly expanding: the global AI ASIC market reached $12 billion in 2024, with Morgan Stanley projecting $30 billion by 2027 (CAGR 34%). Broadcom has already made significant strides in the custom chip arena, including its collaboration with Google on custom TPU AI chips. HSBC analysts forecast that by 2026, Broadcom’s custom chip business will grow at a rate well above NVIDIA’s GPU business.
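As a quick sanity check on the market-size figures above, the growth rate implied by the two endpoints can be computed directly. This is a minimal sketch that assumes a three-year span from 2024 to 2027; the function name `implied_cagr` is illustrative. The endpoints alone imply roughly 36% a year, slightly above the quoted 34%, which may reflect rounding or a different base period in the original forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
ASIC_MARKET_2024_BN = 12.0
ASIC_MARKET_2027_BN = 30.0
YEARS = 2027 - 2024  # assumption: growth is measured over three full years

cagr = implied_cagr(ASIC_MARKET_2024_BN, ASIC_MARKET_2027_BN, YEARS)
print(f"Implied CAGR 2024-2027: {cagr:.1%}")
```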

Broadcom is also engaging in direct competition with NVIDIA in AI networking chips. The Thor Ultra chip is positioned to rival NVIDIA’s network interface chips, aiming to further consolidate Broadcom’s control over data center internal networking for AI applications.

The Surge and Speculative Risks in AI Compute Investments

The collaboration between OpenAI and Broadcom represents just one example of the recent surge in AI compute investments. Over the past month, OpenAI has announced several multibillion-dollar deals, securing up to 26 GW of compute capacity with NVIDIA, AMD, and Broadcom.

Citigroup analysts estimate that for every additional 1 GW of compute capacity, OpenAI needs to invest approximately $50 billion in infrastructure. Based on this, OpenAI’s latest partnerships with chipmakers are expected to result in cumulative capital expenditures of around $1.3 trillion by 2030.
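The arithmetic behind the $1.3 trillion figure is simple enough to reproduce. The sketch below multiplies the quoted per-gigawatt cost by the 26 GW total; applying the same rate to Broadcom’s 10 GW share is an extrapolation for illustration only, not a figure from Citigroup, and it describes total infrastructure spending rather than revenue to any chipmaker.

```python
COST_PER_GW_USD_BN = 50   # Citigroup estimate quoted in the article
TOTAL_GW = 26             # capacity across NVIDIA, AMD, and Broadcom deals
BROADCOM_GW = 10          # Broadcom portion, per the announcement


def capex_usd_bn(gigawatts: float, cost_per_gw_bn: float = COST_PER_GW_USD_BN) -> float:
    """Estimated infrastructure spending in billions of US dollars."""
    return gigawatts * cost_per_gw_bn


total_capex = capex_usd_bn(TOTAL_GW)
# Assumption: the same per-GW cost applies to the Broadcom deployment.
broadcom_capex = capex_usd_bn(BROADCOM_GW)

print(f"All deals: ${total_capex / 1000:.1f} trillion")
print(f"Broadcom share (illustrative): ${broadcom_capex:.0f} billion")
```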

However, such massive investments have raised market concerns about a potential AI bubble. The current AI investment frenzy has drawn comparisons to the dot-com bubble more than two decades ago.

At the turn of the century, investors poured capital into emerging internet companies, believing that the internet would transform every business model. As a result, companies without proven revenue or profitability were valued far out of line with their fundamentals. Between 1995 and 2000, the Nasdaq Technology Index surged nearly fivefold, while the combined market capitalization of internet companies reached roughly $4.5 trillion, despite total revenues of only $21 billion and cumulative losses of $62 billion. The gap between earnings and valuation was stark.

Source: TradingView

OpenAI’s own financials are also drawing scrutiny. While the company’s annualized revenue in H1 2025 already exceeded $10 billion, and full-year revenue is expected to more than double to $12.7 billion, its net loss in 2024 totaled $4 billion, with projections suggesting this could rise to $8 billion in 2025.

To maintain its lead in the AI arms race, OpenAI has pledged over $115 billion in capital spending over the next five years, primarily for building its own data centers and other compute infrastructure.

This underscores that, while AI compute investments are driving rapid growth across the semiconductor and cloud infrastructure markets, significant uncertainty remains. OpenAI’s high-intensity capital expenditures and substantial losses serve as a reminder that if actual AI compute demand falls short of expectations, it could have a direct impact on industry dynamics and individual company performance in the coming years.

Conclusion

Broadcom’s strong rise in the AI sector represents a significant transformation for the semiconductor industry. As AI applications expand from training to inference, the ASIC market, led by Broadcom, is expected to continue growing. Looking ahead, the AI chip market may no longer be dominated by NVIDIA alone, and could instead evolve into a diversified landscape in which GPUs and ASICs coexist over the long term.

Disclaimer: The content of this article does not constitute a recommendation or investment advice for any financial products.
