OpenAI to Become the Next Trillion-Dollar Giant—Do You Believe Jensen Huang’s Prediction?

03:44 September 30, 2025 EDT

“OpenAI is highly likely to become the world’s next multi-trillion-dollar mega-cap company,” Nvidia CEO Jensen Huang declared in a recent podcast, making one of his boldest predictions to date. Yet his remarks come at a time when OpenAI CEO Sam Altman is warning that “too much capital is pouring into unproven AI ventures,” while Meta CEO Mark Zuckerberg has drawn parallels between today’s AI infrastructure frenzy and the excesses of past market bubbles.

Just days before Huang’s comments, Nvidia announced a landmark partnership with OpenAI: OpenAI will use Nvidia’s systems to build and operate at least 10GW of AI data centers to train and run its next-generation models. As part of the deal, Nvidia also plans to invest up to $100 billion in OpenAI over time.

Jensen Huang’s Big Bet

While leading voices in the AI industry are sounding alarms over overheating, Jensen Huang is doubling down with extraordinary confidence in OpenAI. He argues that the company’s “dual exponential growth” could drive its valuation to rise faster than any technology firm in history — a view already supported by hard data.

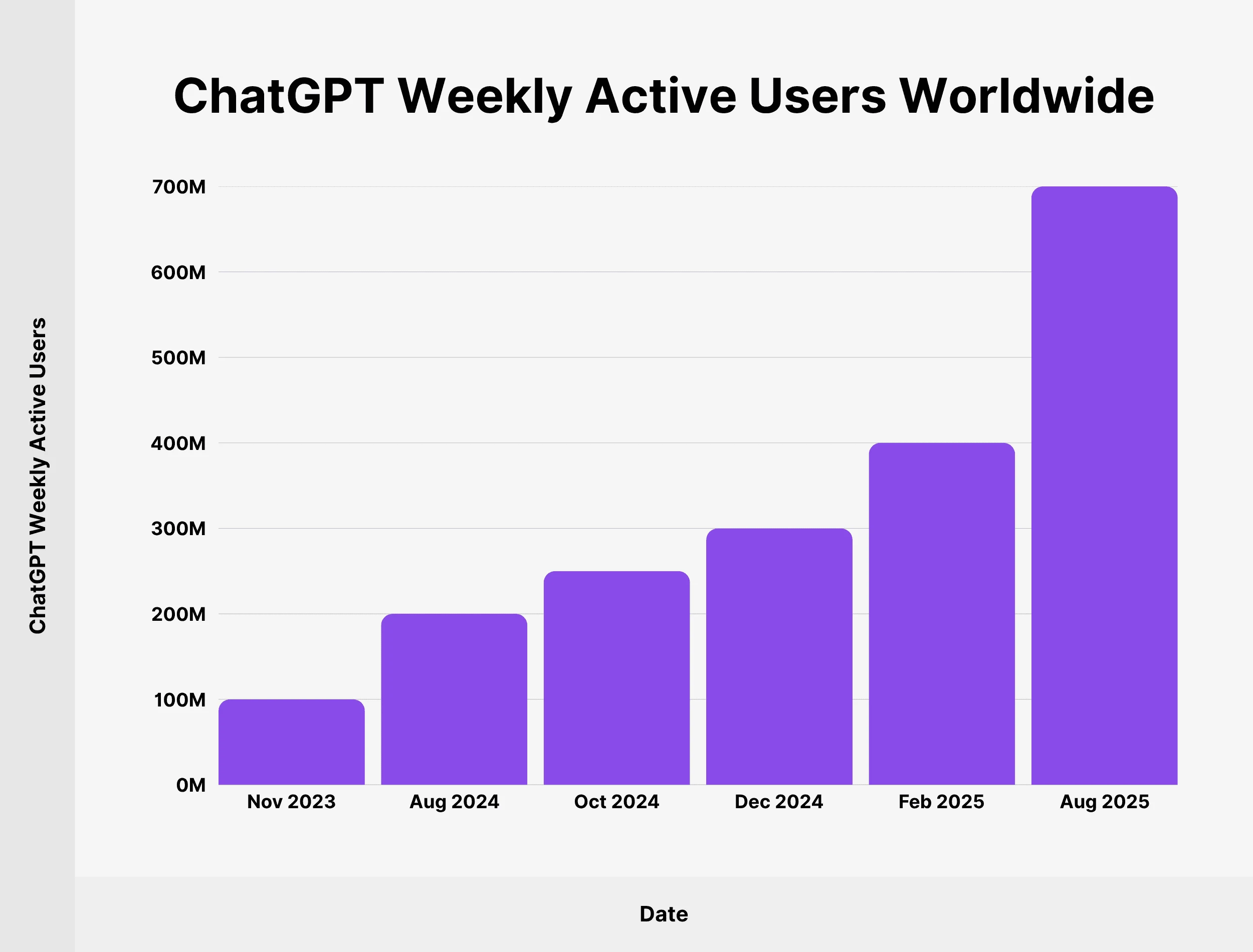

On the consumer and revenue fronts, OpenAI has built a meaningful commercial foundation. By 2025, its flagship product ChatGPT has reached 700 million weekly active users, with more than 20 million paid subscribers.

Source: Backlinko

Momentum on the enterprise side has been even stronger. By September 2024, ChatGPT Enterprise surpassed 3 million paying users across data-intensive industries such as finance, healthcare, and manufacturing. Premium offerings — including a “PhD-level research agent” priced at $20,000 per month — are projected to contribute 20% to 25% of total revenue over time.

Revenue growth has been equally striking. In the first half of 2025, OpenAI generated roughly $4.3 billion, already exceeding last year’s full-year figure by 16%. Full-year revenue is projected to hit $13 billion, with the company lifting its 2030 target to more than $200 billion — a level that would surpass the current annual revenue of Nvidia or Meta.
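The revenue figures above imply two numbers the text does not state outright: last year's full-year revenue and the growth rate needed to reach the 2030 target. The Python sketch below is illustrative arithmetic only. It uses the figures quoted in this article, the function and variable names are hypothetical, and it treats the "more than $200 billion" target as exactly $200 billion.

```python
# Illustrative arithmetic only; every input is a figure quoted in the article.

def implied_base(value: float, pct_above: float) -> float:
    """Return the base that `value` exceeds by `pct_above` (0.16 means 16%)."""
    return value / (1.0 + pct_above)


def required_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to grow from `start` to `end`."""
    return (end / start) ** (1.0 / years) - 1.0


h1_2025_revenue = 4.3    # USD billions, first half of 2025
prior_year_revenue = implied_base(h1_2025_revenue, 0.16)

projected_2025 = 13.0    # USD billions, full-year projection
target_2030 = 200.0      # USD billions, lower bound of the stated 2030 target
growth_needed = required_cagr(projected_2025, target_2030, years=5)

print(f"Implied prior-year revenue: ${prior_year_revenue:.1f}B")
print(f"Annual growth needed, 2025 to 2030: {growth_needed:.0%}")
```

On these inputs, last year's revenue works out to roughly $3.7 billion, and hitting the 2030 target would take compound growth of more than 70% a year for five straight years.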

Demand for computing power is rising at an even steeper trajectory. OpenAI President Greg Brockman recently told media that, in terms of compute capacity, the industry remains “three orders of magnitude behind.” To achieve the vision of “always-on, always-working” AI, he estimated the world will ultimately require around 10 billion GPUs — a figure greater than the Earth’s current population of 8.2 billion.

In the near term, OpenAI’s joint project with Nvidia to build 10GW of AI data centers will alone require 4 to 5 million GPUs, a volume reportedly on par with Nvidia’s entire projected GPU shipments for 2025 and roughly double its 2024 output. Some third-party estimates run higher, putting Nvidia’s 2025 GPU sales at 6.5 million to 7 million units, up from 3 million to 3.5 million in 2024.

Huang insists this demand surge is not a short-lived spike. By his calculation, delivering $10 trillion in incremental global GDP from AI will require $5 trillion in annual capital expenditure for compute infrastructure.

Yet despite its breakneck growth, OpenAI remains unprofitable, with losses expected to exceed $5 billion in 2025 as revenue still falls short of the capital costs of data center buildouts. Huang, however, frames this “cash burn” as the necessary price of technological leapfrogging — akin to Google’s early, sustained investments in search. In his view, the payoff will come once economies of scale and technical breakthroughs converge.

Core Logic

Huang emphasized that his confidence in OpenAI rests not on market hype but on what he calls the “fundamental physics logic” of how AI technology evolves. That logic shows up in three scaling laws covering pre-training, post-training, and inference: each stage exponentially amplifies compute demand, driving growth in computational resources across the entire industry.

In the pre-training phase, the scale of compute consumption is already widely accepted across the industry. GPT-4’s training compute, for example, was more than two orders of magnitude larger than GPT-3’s, and next-generation models are expected to require even more. Google’s Gemini Ultra 1.0 is estimated to have required about 2.5 times the training compute of GPT-4.

However, Huang believes the stage with the greatest explosive potential is not training, but inference. Inference is the bridge between AI and real-world applications, and its mode has evolved from “single-response” to “continuous reasoning.” Each chat interaction, video rendering, or algorithm adjustment generates significant compute consumption. As he stated, “The longer the thinking time, the higher the answer quality, and the more computing power is required.”

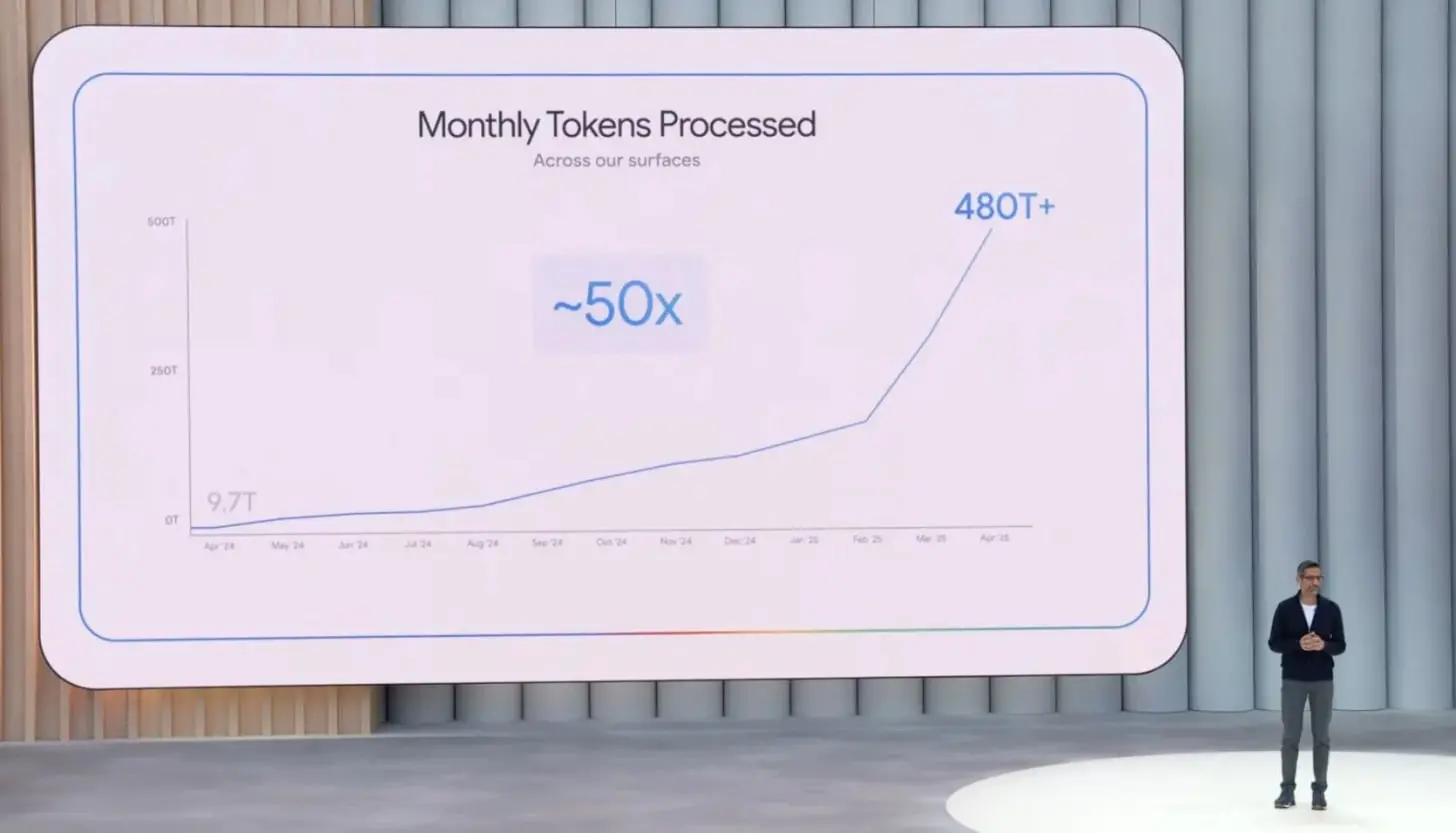

Compute demand for inference has already reached an inflection point. Google’s monthly token volume surged from 9.7 trillion in April 2024 to 480 trillion in April 2025, nearly a 50-fold increase in one year. Microsoft’s Azure AI infrastructure processed more than 100 trillion tokens in Q1, a fivefold year-over-year increase, with 50 trillion in March alone. China’s domestic market is also growing explosively: daily token usage on ByteDance’s Volcano Engine rose 137-fold year-over-year.

Source: Interconnects
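The token volumes quoted above can be turned into comparable growth rates. The following Python sketch is illustrative arithmetic on the article's figures only, with hypothetical function and variable names. Because the Azure total is stated as "over 100 trillion," the Azure values are approximate bounds rather than exact results.

```python
# Illustrative arithmetic only; every input is a figure quoted in the article.

def growth_multiple(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


def equivalent_monthly_rate(multiple: float, months: int) -> float:
    """Constant month-over-month growth rate that compounds to `multiple`."""
    return multiple ** (1.0 / months) - 1.0


# Google: monthly tokens processed, in trillions
google_multiple = growth_multiple(9.7, 480.0)
google_monthly = equivalent_monthly_rate(google_multiple, months=12)

# Azure: quarterly tokens in trillions, treating "over 100 trillion" as 100
azure_q1 = 100.0
azure_prior_year_q1 = azure_q1 / 5.0   # implied by the fivefold increase
azure_march_share = 50.0 / azure_q1    # share of the quarter handled in March

print(f"Google year-over-year multiple: {google_multiple:.1f}x")
print(f"Equivalent monthly growth: {google_monthly:.0%}")
print(f"Azure implied prior-year Q1: {azure_prior_year_q1:.0f} trillion tokens")
print(f"Azure March share of Q1: {azure_march_share:.0%}")
```

March accounting for about half of Azure's quarterly volume suggests that usage was still accelerating within the quarter rather than growing evenly.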

Institutional forecasts point the same way. IDC expects the global AI inference computing market to reach $1.2 trillion by 2027, ten times its 2023 size. Oracle founder Larry Ellison said on an earnings call that the AI inference market will “far exceed” the training market and that current inference compute is already showing signs of “depletion.”

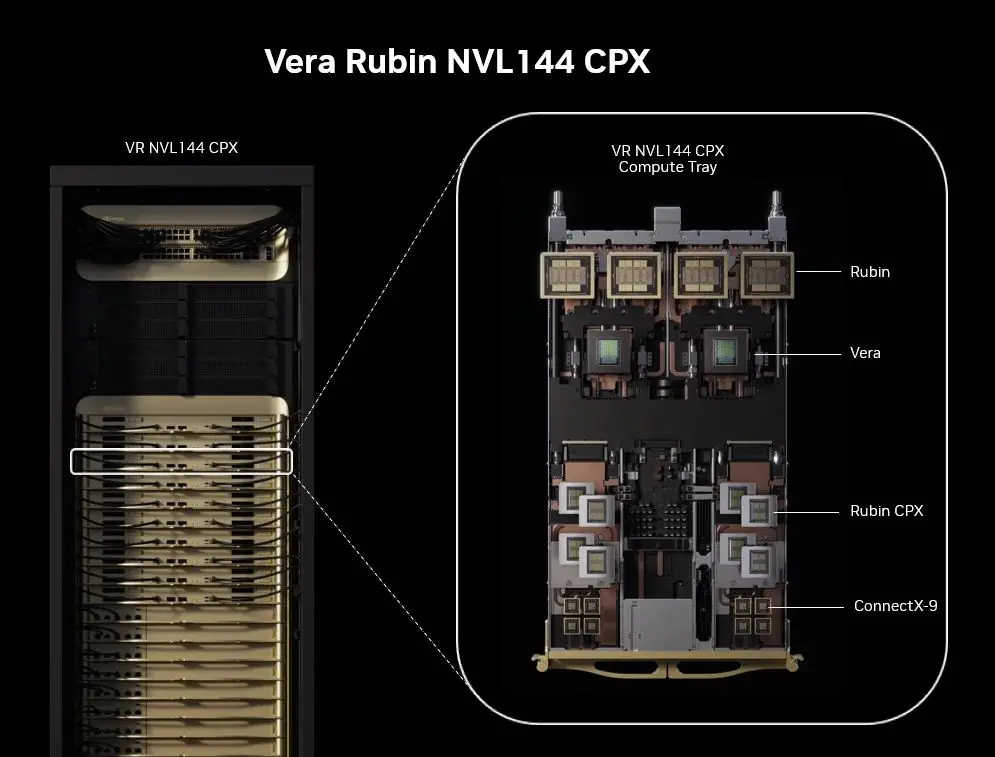

To capture the inference market, NVIDIA has launched the Rubin CPX GPU, designed specifically for long-context inference. NVIDIA claims a return on investment of up to 50 times, well above the roughly 10 times attributed to traditional GPUs. This cycle of technological iteration and demand growth forms a positive feedback loop, and it underpins Huang’s argument that “the era of general-purpose computing is ending, and the era of accelerated computing and AI is arriving.”

Source: NVIDIA

CICC research reports add that visibility into AI infrastructure demand now extends beyond 2027. NVIDIA’s projection of $3–4 trillion in AI infrastructure investment by 2030 is also corroborated by orders and guidance from cloud providers and hardware vendors, pointing to continued expansion across the ecosystem.

The $100 Billion Alignment

In September 2025, NVIDIA announced plans to invest up to $100 billion in OpenAI to jointly build at least 10GW of AI data centers. This collaboration represents the largest single AI investment to date and also reveals the deeper competitive logic within the compute infrastructure industry.

The details of the deal show a high degree of strategic planning and phased execution. Under the agreement, funding is tied to data center deployment: the first phase, a 1GW system, is scheduled to come online in the second half of 2026 and corresponds to an initial $10 billion investment, with subsequent funding released gradually as GPU utilization increases.

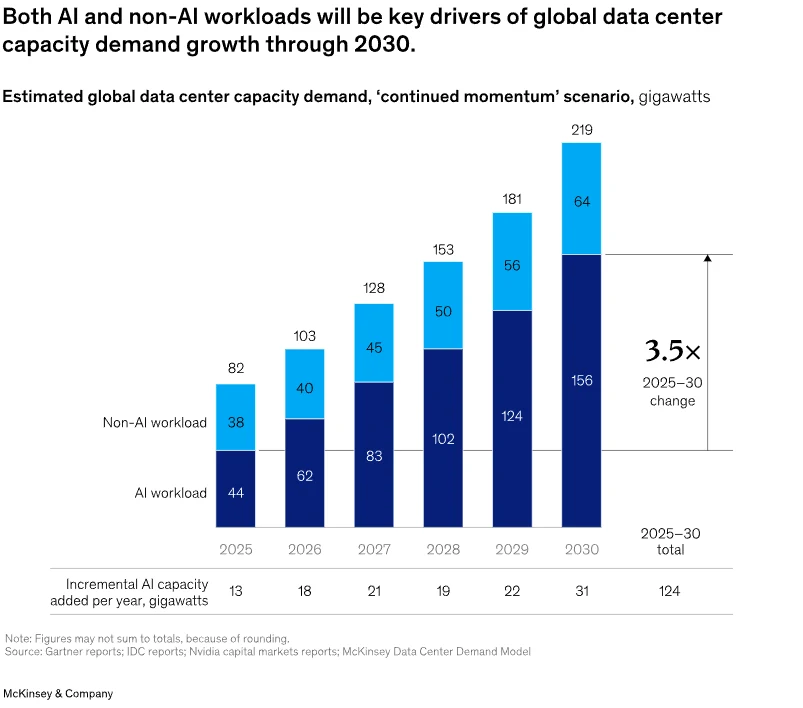

The 10GW scale sets an industry benchmark. McKinsey data puts global AI workload power demand in 2025 at only 44GW, so this project alone would equal nearly one-quarter of that figure. In terms of cost structure, building 1GW of data center capacity requires $50–60 billion, of which about $35 billion goes to NVIDIA chips and systems, implying approximately $350 billion in direct revenue for NVIDIA from the entire project.

Source: McKinsey
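The project-scale arithmetic above is easy to reproduce. The Python sketch below is illustrative only: it uses the figures quoted in this article, its constant and variable names are hypothetical, and it assumes the per-GW cost split applies evenly across all 10GW, which the article implies but does not confirm.

```python
# Illustrative arithmetic only; every input is a figure quoted in the article.

PROJECT_GW = 10.0              # planned OpenAI-NVIDIA data center capacity
GLOBAL_AI_POWER_GW = 44.0      # McKinsey figure for 2025 AI workload power demand
COST_PER_GW = (50.0, 60.0)     # USD billions, low and high build cost per GW
NVIDIA_PER_GW = 35.0           # USD billions of NVIDIA chips and systems per GW
NVIDIA_INVESTMENT = 100.0      # USD billions, maximum planned investment in OpenAI

share_of_global_power = PROJECT_GW / GLOBAL_AI_POWER_GW
total_build_cost = tuple(cost * PROJECT_GW for cost in COST_PER_GW)
nvidia_revenue = NVIDIA_PER_GW * PROJECT_GW

# NVIDIA's share of spending is smallest at the high build cost, largest at the low.
nvidia_share_of_cost = tuple(NVIDIA_PER_GW / cost for cost in reversed(COST_PER_GW))

# How the planned investment compares with the revenue the project implies.
investment_vs_revenue = NVIDIA_INVESTMENT / nvidia_revenue

print(f"Share of 2025 global AI power demand: {share_of_global_power:.0%}")
print(f"Total build cost: ${total_build_cost[0]:.0f}B to ${total_build_cost[1]:.0f}B")
print(f"Implied NVIDIA revenue: ${nvidia_revenue:.0f}B")
print(f"NVIDIA share of build cost: {nvidia_share_of_cost[0]:.0%} to {nvidia_share_of_cost[1]:.0%}")
print(f"Investment as a share of implied revenue: {investment_vs_revenue:.0%}")
```

On these assumptions, the full buildout would cost $500 billion to $600 billion, and NVIDIA's maximum $100 billion investment would equal a little under 30% of the hardware revenue the project implies.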

For both parties, the collaboration achieves clear value complementarities.

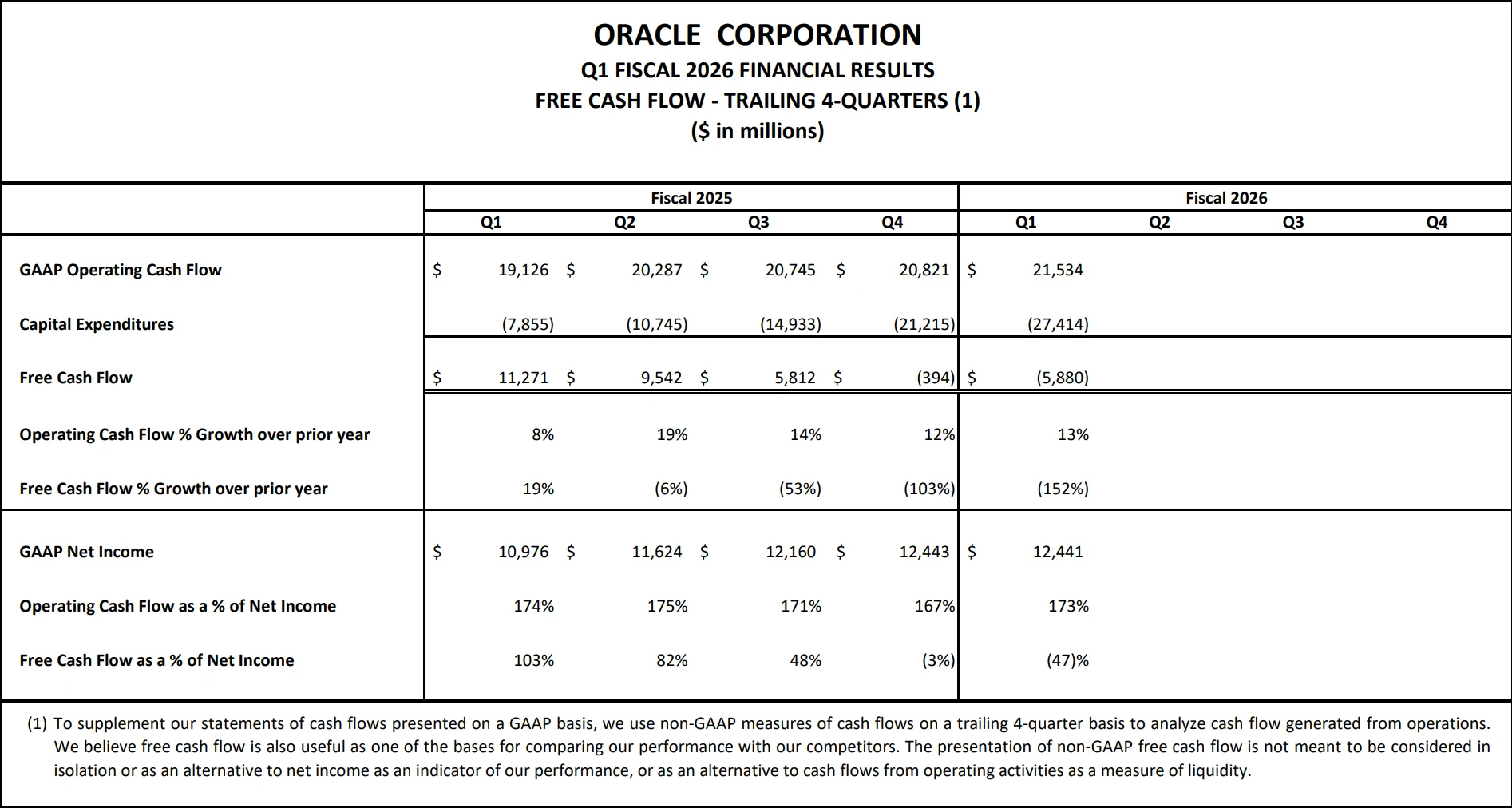

For OpenAI, the investment alleviates development bottlenecks—compute shortages and financial pressure. In addition to NVIDIA’s $100 billion injection, OpenAI has signed a five-year $300 billion compute purchase contract with Oracle and plans to invest another $100 billion in leasing backup servers to support next-generation model development.

For NVIDIA, the deal accomplishes the goals of “customer lock-in + standard setting + ecosystem consolidation”: by securing OpenAI as an exclusive customer, participating deeply in the formulation of large model training technical standards, and leveraging joint R&D to optimize hardware-software synergy, NVIDIA further consolidates its monopoly position in the AI chip market.

Notably, this collaboration reflects the deeper logic of industry chain restructuring. Previously, OpenAI primarily obtained compute through Microsoft, which first had to purchase NVIDIA hardware before providing cloud services. This deal bypasses the intermediary, directly linking compute supply and demand, creating a “hardware manufacturer + AI R&D leader” deep integration model that may become a new industry paradigm.

NVIDIA also invested $5 billion in Intel during the same period to jointly develop AI infrastructure and personal computing products, a move that counters AMD and rounds out its full-stack position from cloud training to edge inference, laying the foundation for a long-term market advantage.

The Bubble Debate

Jensen Huang’s optimistic forecasts stand in stark contrast to the market’s cautious sentiment, reflecting fundamentally different perceptions of the AI industry’s development logic.

The “bubble” thesis rests on three main arguments. First is the mismatch between valuation and profitability: OpenAI is projected to incur losses exceeding $5 billion in 2025, with R&D spending approaching 50% of revenue, far above the typical 10%-20% seen at tech giants like Amazon and Microsoft and even higher than Meta’s roughly 25%. Second is premature infrastructure buildout: Mark Zuckerberg has likened the current AI infrastructure frenzy to the overinvestment seen during the internet bubble, suggesting that large amounts of compute capacity may sit idle. Third is the indiscriminate allocation of capital: Sam Altman has warned that excessive funding is flowing into AI ventures with unproven technology, many of which lack a clear path to commercialization.

In contrast, Huang offers a rebuttal framed through the lens of a “generational revolution.” He argues that skeptics conflate “short-term hype” with “long-term trends,” whereas AI represents a true generational shift in how industries operate. The fundamental driver, according to Huang, is not speculative capital but structural, largely inelastic growth in compute demand.

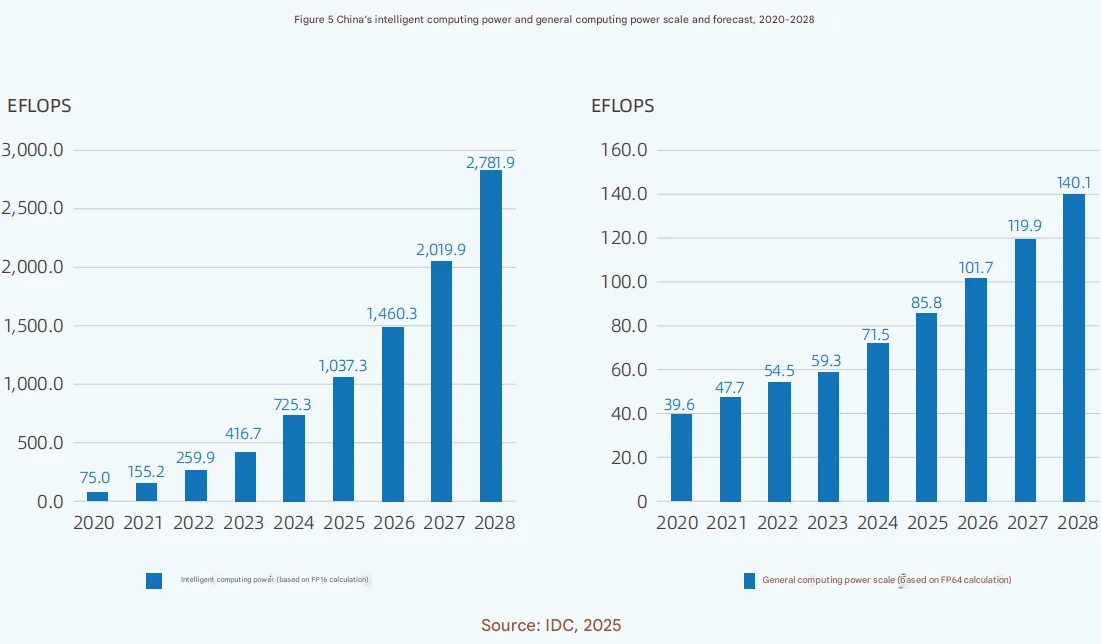

The data lend support to this view: China’s AI compute capacity is expected to reach 1,037.3 EFLOPS in 2025 and 2,781.9 EFLOPS by 2028, with five-year compound annual growth rates (2023–2028) of 46.2% for AI-specific compute and 18.8% for general-purpose compute. Globally, NVIDIA’s data center revenue is projected to reach $173 billion in 2025, roughly four times the $42 billion reported in 2023.

Source: China AI Computing Power Development Assessment Report
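The growth figures above can be cross-checked against one another. The Python sketch below is illustrative arithmetic on the article's numbers, with hypothetical function and variable names. It assumes the 46.2% compound rate applies to the same EFLOPS series as the 2025 and 2028 capacity figures, which the article suggests but does not state explicitly.

```python
# Illustrative arithmetic only; every input is a figure quoted in the article.

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate from `start` to `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def back_out_start(end: float, rate: float, years: int) -> float:
    """Starting value implied by an ending value and a compound growth rate."""
    return end / (1.0 + rate) ** years


eflops_2025 = 1037.3
eflops_2028 = 2781.9

# Assumption: the 46.2% 2023-2028 CAGR describes this same EFLOPS series.
implied_eflops_2023 = back_out_start(eflops_2028, rate=0.462, years=5)
growth_2025_to_2028 = cagr(eflops_2025, eflops_2028, years=3)

# NVIDIA data center revenue in USD billions: 2023 reported, 2025 projected.
nvidia_multiple = 173.0 / 42.0

print(f"Implied 2023 AI compute: {implied_eflops_2023:.0f} EFLOPS")
print(f"Implied 2025-2028 annual growth: {growth_2025_to_2028:.1%}")
print(f"NVIDIA data center revenue multiple: {nvidia_multiple:.1f}x")
```

If that assumption holds, the forecast implies a 2023 base of a little over 400 EFLOPS and annual growth of just under 40% between 2025 and 2028, slower than the five-year average because the earlier years grow faster.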

Further, a CICC research report notes that as AI commercialization starts generating measurable returns and cloud providers must increase compute investments to maintain a leading position, the short- to mid-term share of capital expenditure for cloud providers will not be a limiting factor—compute demand remains highly predictable.

Ultimately, the two views diverge on time horizon: the bubble thesis focuses on short-term profitability and valuation, while Huang’s camp emphasizes the long-term value created by a technological revolution. History offers some support: after the internet bubble burst, companies with genuine technological barriers and practical applications eventually recovered their value, as Cisco and Amazon did. This helps explain why Huang is comfortable making contrarian bets amid widespread market caution.

Strategic Framework

Jensen Huang’s forecasts and NVIDIA’s strategic investments provide investors with a clear framework for understanding the AI industry value chain. We believe the core logic revolves around two main threads: the explosion in compute demand and continuous technological iteration.

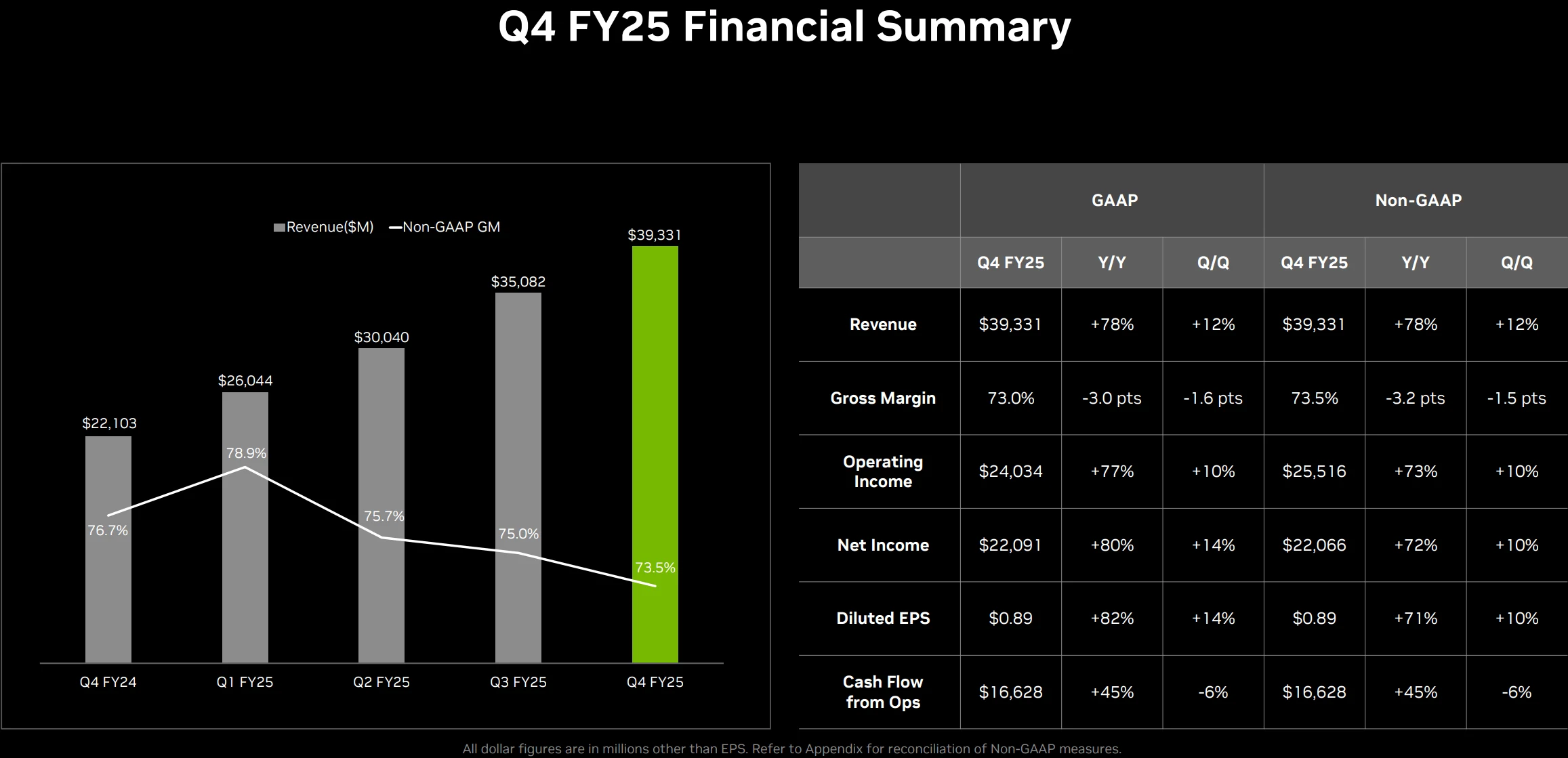

Compute Infrastructure

In the compute hardware segment, NVIDIA is the primary beneficiary. Its data center business generated $115.2 billion in revenue in fiscal 2025. The global AI chip market is expected to surpass $207 billion in 2025, and with a market share exceeding 90%, NVIDIA is positioned to continue capturing substantial industry upside.

Source: NVIDIA

Data center construction and operations are also entering a period of rapid expansion. Building 10GW-class data centers involves multiple components, including civil works, power supply, and cooling systems. Specialized operators such as Equinix and Digital Realty are already scaling up, with occupancy rates for AI-specific data centers typically exceeding 80%. In terms of cost structure, power and cooling systems account for roughly 30% of the construction cost per GW, giving equipment suppliers strong revenue leverage.

Inference Computing

Inference chips and optimization services have emerged as the new competitive frontier. The launch of NVIDIA’s Rubin CPX GPU marks a shift toward professional-grade inference hardware. Its design, optimized for long-context inference handling millions of tokens, offers significant advantages in financial research, code generation, and other applications. Citigroup projects that this product could more than triple NVIDIA’s inference-related revenue. At the software level, companies providing inference optimization solutions are also well-positioned to benefit.

The edge inference device market holds substantial potential. As the vision of “one dedicated GPU per individual” gradually materializes, demand for on-device inference is accelerating. Intel and NVIDIA’s collaboration on the “Intel X86 with RTX” chip, which integrates RTX GPUs to accelerate AI on personal computers, will directly benefit associated device manufacturers and chip packaging firms.

AI Software and Services

The financing and commercialization progress of leading AI companies remains a critical indicator. Although OpenAI is not publicly listed, its valuation has risen rapidly alongside revenue growth. Following a new funding round in 2025, its valuation is expected to surpass $500 billion, making it a key potential investment target if it goes public. Second-tier companies such as Anthropic and xAI also show promise. Notably, xAI plans to train its Grok 3 model using 100,000 H100 GPUs (five times GPT-4’s compute requirement) and has secured 16,000 H100 GPUs from Oracle.

Vertical industry application companies are also poised for a valuation re-rating. In finance, AI inference is expanding from peripheral areas like customer service into core functions such as risk control and investment research; Citigroup forecasts AI could add $170 billion in profits to the banking sector by 2028, a roughly 9% increase. In healthcare, AI inference demand for imaging diagnostics and drug development continues to grow, with order growth exceeding 30%. In manufacturing, real-time inference requirements for industrial robotics are driving edge compute deployment, favoring companies that integrate hardware and algorithm capabilities.

Ecosystem-Centric Enterprises

Companies deeply integrated with leading AI players are likely to capture outsized returns. For instance, Microsoft, an early investor in OpenAI, leverages Azure cloud services to integrate with OpenAI technologies; its Azure AI business is projected to generate $10.4 billion in revenue in FY2025. Oracle, through its $300 billion compute contract with OpenAI, saw OCI cloud revenue jump 54% YoY to $3.3 billion.

Source: Oracle

Conclusion

Wall Street remains divided on Huang’s predictions. While NVIDIA’s financial results do validate his understanding of the AI industry, analysts are concerned that excessive reliance on a single company could pose systemic risks across the sector. Mortonson from Beya warned, “If OpenAI fails, the impact will extend beyond the technology industry.”

Whether Huang’s multibillion-dollar bet pays off depends not only on OpenAI’s technological progress but also on the broader AI industry’s ability to navigate challenges such as power shortages, capital constraints, and improvements in model efficiency.

Disclaimer: The content of this article does not constitute a recommendation or investment advice for any financial products.
