What Nvidia’s $5 Billion Strategic Stake in Intel Means for the Chip Industry

23:14 September 18, 2025 EDT

On September 18, 2025, Nvidia announced a strategic $5 billion equity investment in Intel Corporation, acquiring common shares at $23.28 per share. Upon completion of the transaction, Nvidia will hold approximately 4% of Intel's outstanding shares, positioning it as a significant minority stakeholder.
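
As a rough cross-check of the deal terms, dividing the investment by the subscription price gives the number of shares Nvidia receives. Dividing that by the approximate 4% stake gives the total share count the stake implies. This is plain arithmetic on the figures above. Because the 4% is rounded, the implied total is only indicative and is not Intel's reported share count; the helper names are illustrative.

```python
# Deal figures from the announcement. The 4% stake is approximate,
# so the implied share count is only a rough cross-check.
INVESTMENT_USD = 5_000_000_000
PRICE_PER_SHARE_USD = 23.28
APPROX_STAKE = 0.04

def shares_acquired(investment: float, price: float) -> float:
    """Number of shares bought for a given investment at a fixed price."""
    return investment / price

def implied_shares_outstanding(shares: float, stake: float) -> float:
    """Total shares outstanding implied by holding `shares` as a `stake` fraction."""
    return shares / stake

shares = shares_acquired(INVESTMENT_USD, PRICE_PER_SHARE_USD)
total = implied_shares_outstanding(shares, APPROX_STAKE)
print(f"Shares acquired: {shares / 1e6:.1f} million")            # about 214.8 million
print(f"Implied shares outstanding: {total / 1e9:.2f} billion")  # about 5.37 billion
```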

The investment is the latest in a series of capital infusions for Intel, which had previously secured $5.7 billion in U.S. government funding and a $2 billion investment from Japan's SoftBank Group. Intel's shares jumped 33% in pre-market trading on the announcement and closed 22.8% higher, the stock's largest single-day gain since October 1987.

Source: TradingView

Intel has faced persistent revenue headwinds and manufacturing constraints in recent years, while Nvidia, despite its dominance in AI chips, faces growing risks from supply chain dependencies and shifting market conditions. The deal is a calculated move by both companies to address their respective weaknesses, and it could reshape competitive dynamics and supply chains across the semiconductor industry. More broadly, this partnership between two of the industry's largest players may signal a fundamental restructuring of computing architecture for the AI era.

Intel's 'Lifeline' and Nvidia's 'Strategic Expansion'

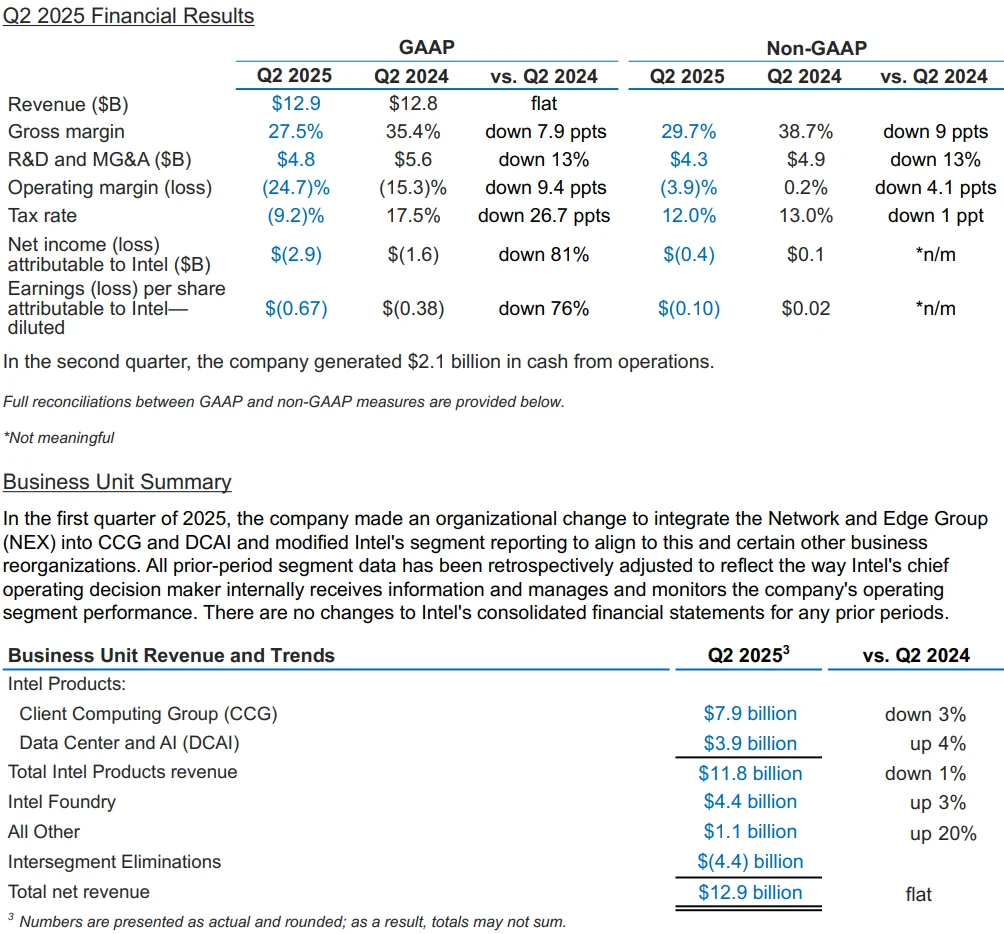

Intel's challenges go beyond short-term fluctuations: they reflect a deeper structural crisis rooted in a delayed business model transformation and eroding core technological competitiveness. In Q2 2025, Intel reported revenue of $12.9 billion and a net loss of $2.9 billion. Even its much-anticipated foundry business posted an operating loss of $3.17 billion on $4.4 billion in revenue.
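
Expressing these losses as a share of revenue makes their scale clearer. The sketch below is simple arithmetic on the Q2 2025 figures cited above; the `margin` helper is an illustrative name, not a metric taken from Intel's reporting.

```python
def margin(profit: float, revenue: float) -> float:
    """Profit (negative for a loss) as a fraction of revenue."""
    return profit / revenue

# Q2 2025 figures cited above, in billions of USD (losses are negative).
intel_net_margin = margin(-2.9, 12.9)
foundry_operating_margin = margin(-3.17, 4.4)

print(f"Intel net margin: {intel_net_margin:.1%}")                  # about -22.5%
print(f"Foundry operating margin: {foundry_operating_margin:.1%}")  # about -72.0%
```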

Source: Intel

Losses of this size on substantial revenue are partly attributable to Intel's manufacturing capabilities lagging behind TSMC's. Intel's 3nm process yield stands at approximately 65%, compared with TSMC's consistent yield of over 90% on the same node. Because the cost of each wafer is spread over fewer working chips, this gap directly raises Intel's production cost per chip, putting the company at a competitive disadvantage and contributing to continued market share erosion.
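
A simple cost model shows why the yield gap matters: a wafer's cost is spread only over the dies that work. The sketch assumes, hypothetically, that both foundries have the same wafer cost and die count (the $20,000 and 300 values are placeholders, not reported numbers), so only the yields from the text differ. Under that assumption the placeholders cancel out and the cost ratio is simply 0.90 / 0.65; real wafer costs and die counts differ between foundries and products.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_rate: float) -> float:
    """Wafer cost spread over only the dies that pass test."""
    good_dies = dies_per_wafer * yield_rate
    return wafer_cost / good_dies

# Hypothetical inputs: identical wafer cost and die count for both foundries,
# so only the yields cited in the text differ.
WAFER_COST = 20_000.0  # assumed placeholder, USD per wafer
DIES_PER_WAFER = 300   # assumed placeholder, gross dies per wafer

intel = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, 0.65)
tsmc = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, 0.90)
print(f"Intel: ${intel:,.2f} per good die")    # $102.56
print(f"TSMC:  ${tsmc:,.2f} per good die")     # $74.07
print(f"Intel premium: {intel / tsmc - 1:.1%}")  # 38.5%
```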

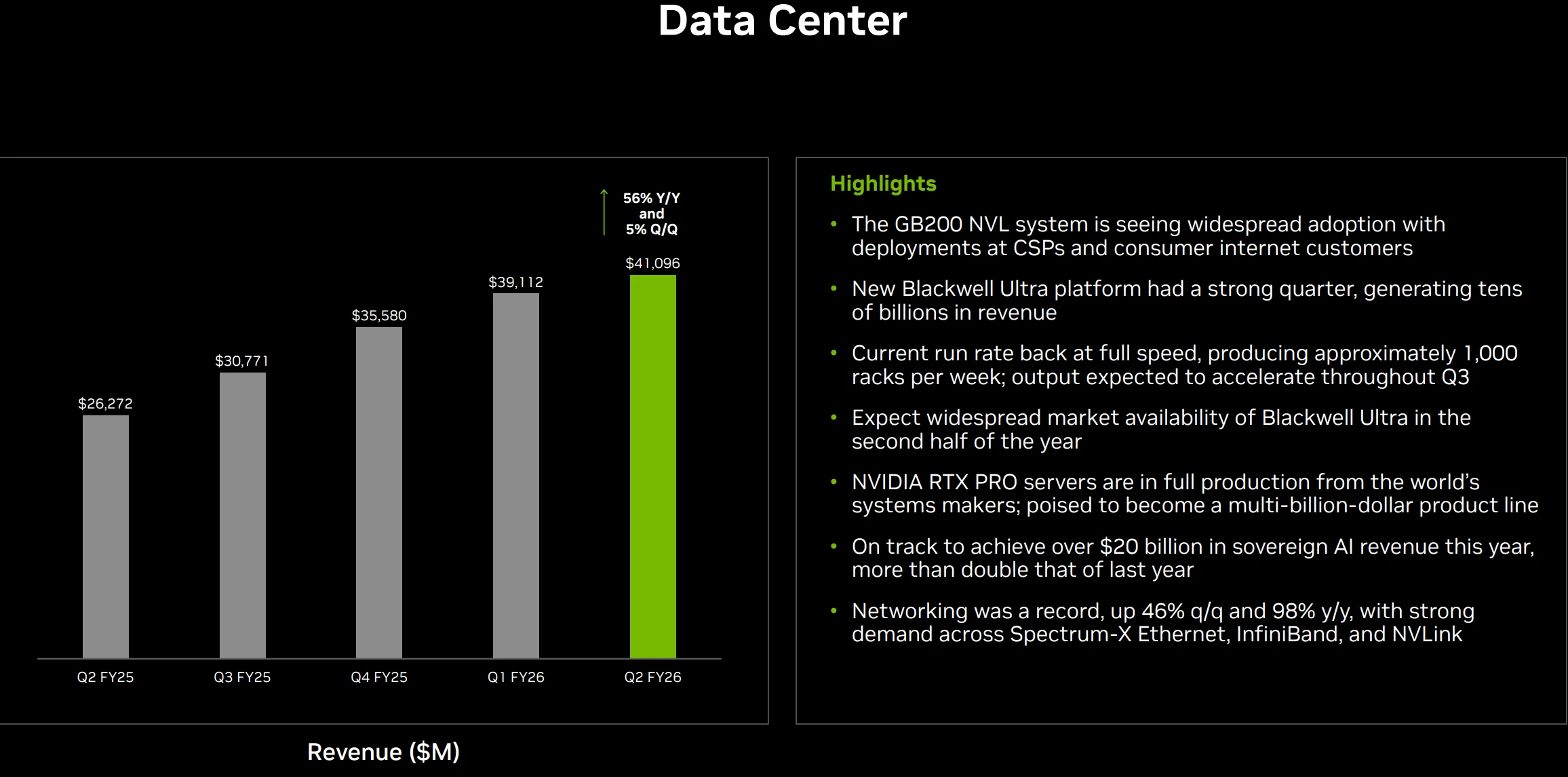

In the core x86 market, Intel's dominance is being steadily eroded by AMD. Q2 2025 saw AMD's server CPU revenue share climb to 41%, a 7.2 percentage point increase year-over-year, continuing its multi-year growth trajectory. AMD's unit share in the server CPU market also expanded to 27.3%. Financial reports show AMD's Q2 revenue reached $7.685 billion, a 31.71% year-over-year increase, with net income attributable to shareholders surging 229.06% to $872 million.
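
The year-over-year percentages can be inverted to recover the prior-year baselines they imply, a quick consistency check on the reported figures. The sketch uses only the numbers in the paragraph above; the baselines it prints are derived values, not figures quoted from AMD's filings, and `prior_value` is an illustrative helper.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Back out last year's value from this year's value and its YoY growth in percent."""
    return current / (1 + growth_pct / 100)

# AMD Q2 2025 figures cited above, in millions of USD.
revenue_q2_2024 = prior_value(7_685, 31.71)
net_income_q2_2024 = prior_value(872, 229.06)
print(f"Implied Q2 2024 revenue: ${revenue_q2_2024:,.0f} million")        # about $5,835 million
print(f"Implied Q2 2024 net income: ${net_income_q2_2024:,.0f} million")  # about $265 million
```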

Source: AMD

Critically, although Intel has some presence in AI acceleration, its in-house GPU technology and software ecosystem trail Nvidia's by a wide margin in both market influence and technical maturity, a gap that matters more as data centers increasingly demand integrated CPU+GPU solutions.

In the AI training chip market, for instance, Nvidia commands 98% market share compared with Intel's sub-1% presence. Similarly, Nvidia captures over 90% of the data center AI server market, while Intel's share continues to decline. Technologically, Intel's Gaudi-series AI accelerators underperform Nvidia's H100/H200, weakening their competitive position and customer appeal.

The $5 billion infusion from Nvidia provides Intel with capital to alleviate pressure on its Ohio fab construction projects. More importantly, it enables Intel to leverage Nvidia's AI computing capabilities and software ecosystem to better meet market demands for AI solutions.

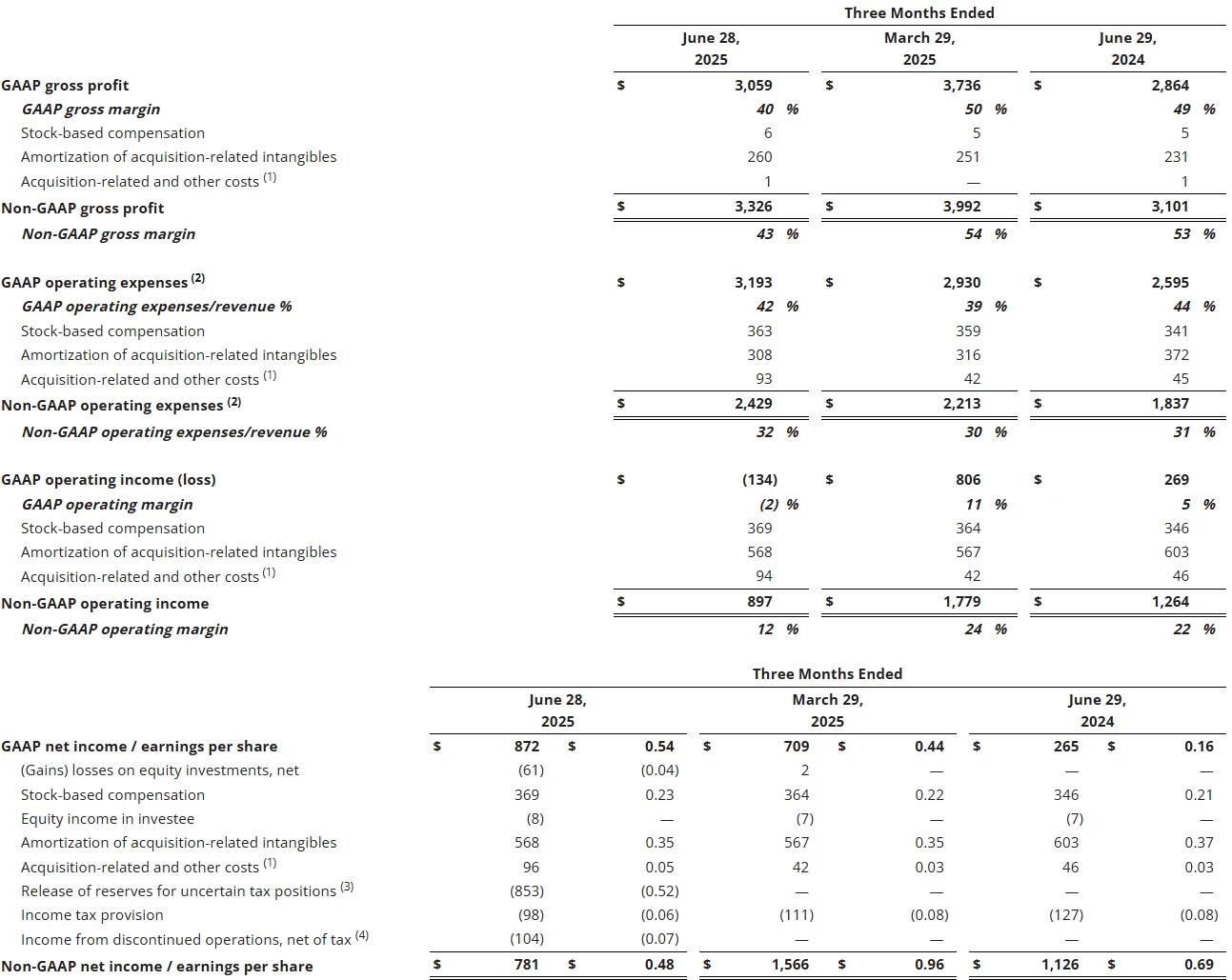

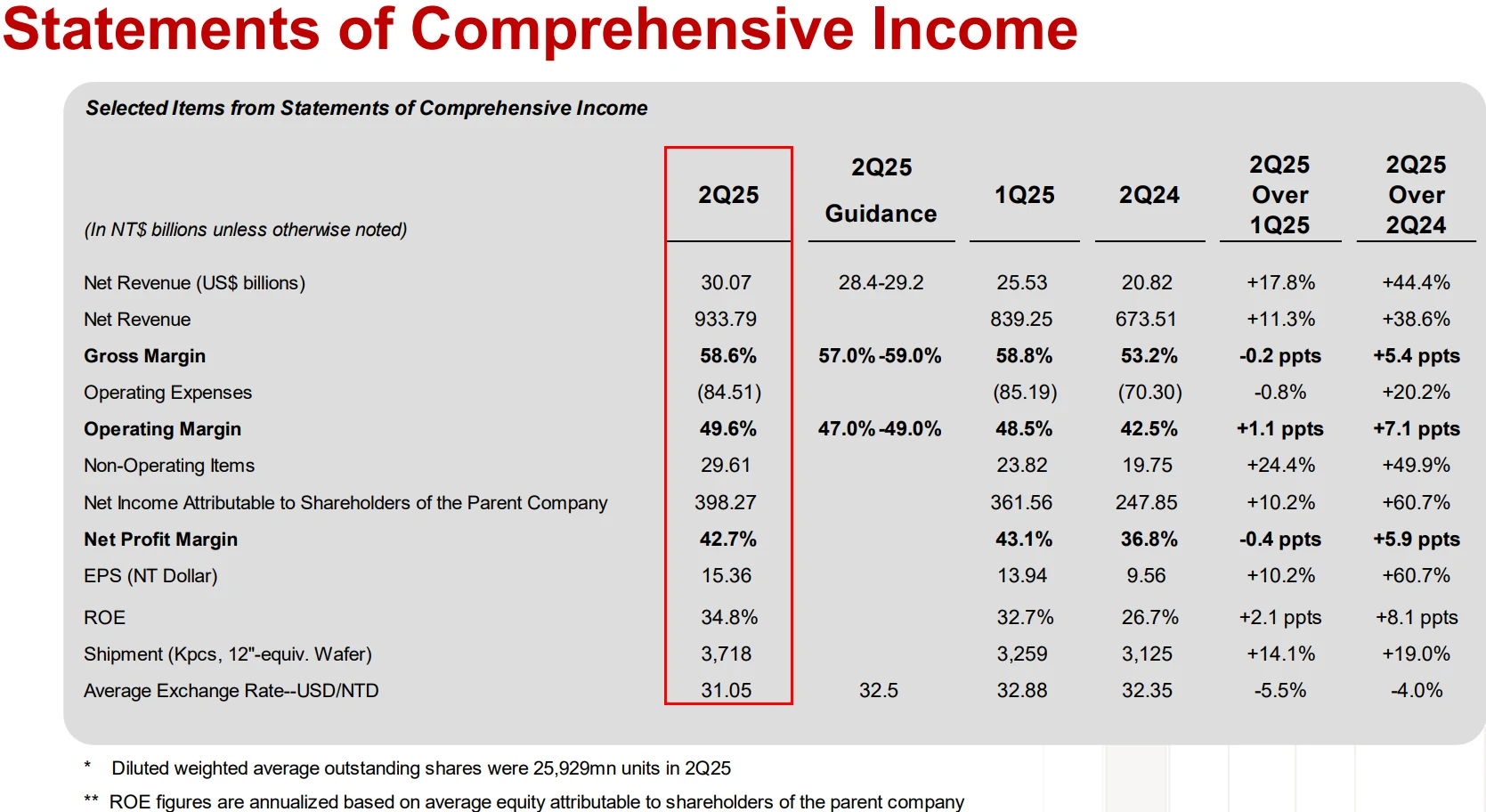

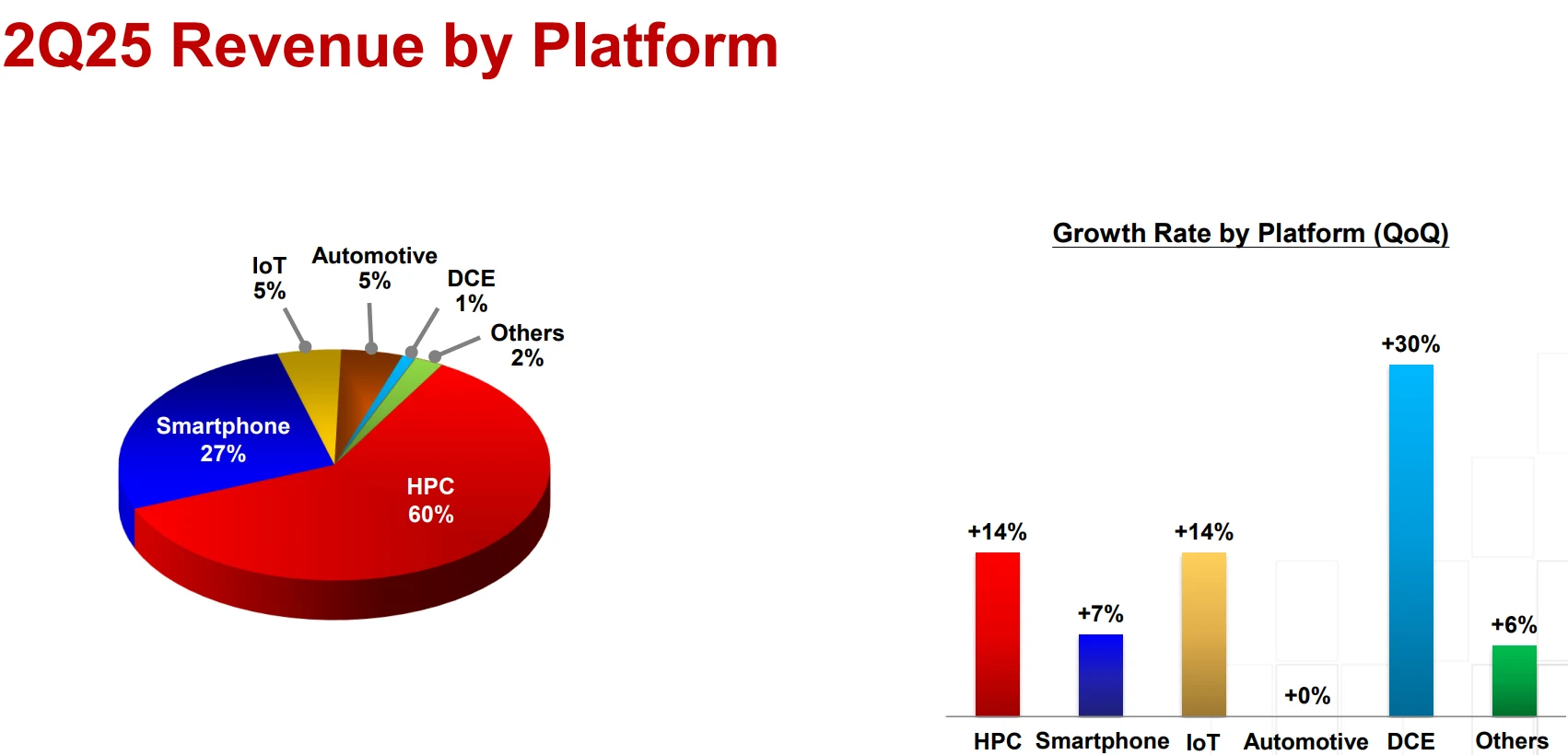

In contrast to Intel's reactive transformation, Nvidia's motivations reflect proactive ecosystem expansion and risk diversification. Despite reporting $41.1 billion in data center revenue for Q2 FY2026 and holding 95% of the global AI training chip market, Nvidia faces two critical challenges. First, it relies heavily on TSMC for manufacturing, and TSMC's advanced 3nm capacity remains persistently constrained, while U.S. export controls separately limit Nvidia's access to the Chinese market. Second, its market reach is limited: although the AI training chip market is substantial, significant opportunities remain untapped in mid-to-low-end AI servers and PC AI computing, traditionally Intel's strongholds.

Source: Nvidia

The collaboration offers Nvidia three strategic benefits. First, it can leverage Intel's extensive x86 ecosystem to evolve its AI infrastructure offerings from standalone GPUs into integrated "CPU+GPU+networking" compute nodes, strengthening its hold on data center customers. Second, it can use Intel's PC market channels to embed its GPU technology in high-performance laptops and workstations, a potential $25 billion to $50 billion market opportunity that, as CEO Jensen Huang has acknowledged, Nvidia has previously underutilized. Third, it diversifies supply chain risk: Intel's advanced packaging technologies could substitute for some of TSMC's system integration services, and if Intel's foundry capabilities improve, Intel might even manufacture certain mid-to-low-end GPUs under contract, reducing Nvidia's dependence on TSMC.

From an industry perspective, the partnership is a natural outgrowth of the shift toward heterogeneous computing. As AI and high-performance computing demands explode, general-purpose CPUs alone can no longer deliver the required efficiency, making the combination of general-purpose computing (CPU) and specialized acceleration (GPU/AI chips) the mainstream architecture. Industry data indicates that 75% of global high-performance computing use cases adopted heterogeneous architectures in 2025, most of them requiring x86 CPUs and Nvidia GPUs to work together.

Previously, collaboration between Nvidia and Intel was limited to a conventional vendor-customer relationship without deep integration, so data transfer between CPUs and GPUs was constrained by the PCIe bus. Under the new agreement, Intel will develop custom x86 CPUs for Nvidia and launch x86 SoCs integrating Nvidia RTX GPUs, with NVLink technology handling CPU-GPU communication. Compared with traditional PCIe designs, NVLink offers substantially higher bandwidth and lower latency, significantly improving computational efficiency. In essence, this integration shows how industry demand is reshaping the way companies collaborate.
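
A back-of-the-envelope calculation illustrates why the interconnect matters. The sketch compares idealized transfer times over a PCIe 5.0 x16 link and over NVLink 4 (the generation used on Nvidia's H100), using nominal per-direction peak figures as assumptions. It ignores latency and protocol overhead, and the bandwidth of the CPU-GPU link in the new Intel-Nvidia products is not specified, so the 80 GB payload and the resulting ratio are illustrative only.

```python
# Assumed nominal per-direction peak bandwidths in GB/s; real links achieve less.
PCIE5_X16_GBPS = 64.0  # PCIe 5.0 x16, roughly 64 GB/s each way
NVLINK4_GBPS = 450.0   # NVLink 4 on H100: 900 GB/s total, i.e. 450 GB/s each way

def transfer_seconds(payload_gb: float, bandwidth_gbps: float) -> float:
    """Idealized time to move a payload at peak bandwidth (no latency or overhead)."""
    return payload_gb / bandwidth_gbps

payload = 80.0  # hypothetical payload size in GB
pcie = transfer_seconds(payload, PCIE5_X16_GBPS)
nvlink = transfer_seconds(payload, NVLINK4_GBPS)
print(f"PCIe 5.0 x16: {pcie:.3f} s")     # 1.250 s
print(f"NVLink 4:     {nvlink:.3f} s")   # 0.178 s
print(f"Speedup: {pcie / nvlink:.1f}x")  # 7.0x
```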

The Convergence of Technology, Market, and Capital

At its core, this collaboration goes well beyond a simple "Intel provides CPUs, Nvidia provides GPUs" arrangement and could amount to deep integration spanning hardware architecture and software ecosystems. In the data center, the x86 CPUs Intel is customizing for Nvidia are unlikely to be standard off-the-shelf models; more likely they will be versions optimized for AI workloads, potentially with redundant instructions trimmed and enhanced interconnect interfaces that connect directly to Nvidia GPUs via NVLink 4.0. Such optimizations would minimize CPU-GPU data transfer overhead, which is particularly valuable for the massive data exchanges required in large-model training.

In the PC segment, the jointly developed x86 RTX SoC departs from conventional integration approaches. AMD's APUs integrate CPU and GPU cores on a single chip, where performance is constrained by process technology and power limits. The Intel-Nvidia solution, by contrast, will likely use advanced packaging to combine separate x86 CPU and RTX GPU components in one package, preserving the performance of each while enabling low-latency coordination. On the software side, Nvidia's CUDA ecosystem is expected to be optimized for this SoC, allowing games and design software to fully exploit its GPU, an advantage AMD's Radeon GPUs are unlikely to match in the near term.

Nvidia CEO Jensen Huang stated: "AI is driving a new industrial revolution, reshaping the entire computing landscape from chips to systems to software." He emphasized that this "historic collaboration" tightly integrates Nvidia's AI and accelerated computing technologies with Intel's CPUs and extensive x86 ecosystem, representing a fusion of "two world-class platforms."

Intel CEO Lip-Bu Tan responded: "Intel's x86 architecture has been the foundation of modern computing for decades, and we continue to innovate our product portfolio to address future workloads." He believes Intel's leadership in data center and client computing platforms, combined with its process technology, manufacturing capabilities, and advanced packaging expertise, will complement Nvidia's dominance in AI and accelerated computing.

This technical synergy will ultimately play out in market competition, and its first visible effect on industry dynamics is likely to be pressure on competitors. For AMD, its long-standing "CPU+GPU" integration advantage may erode: although AMD's APUs offer good cost-performance, they trail Nvidia significantly in AI computing power and software ecosystem (particularly CUDA's support for professional design software). With Intel's x86 CPU performance already comparable to AMD's, the combined Intel-Nvidia solution could establish performance and ecosystem leadership in AMD strongholds such as high-performance laptops and edge computing.

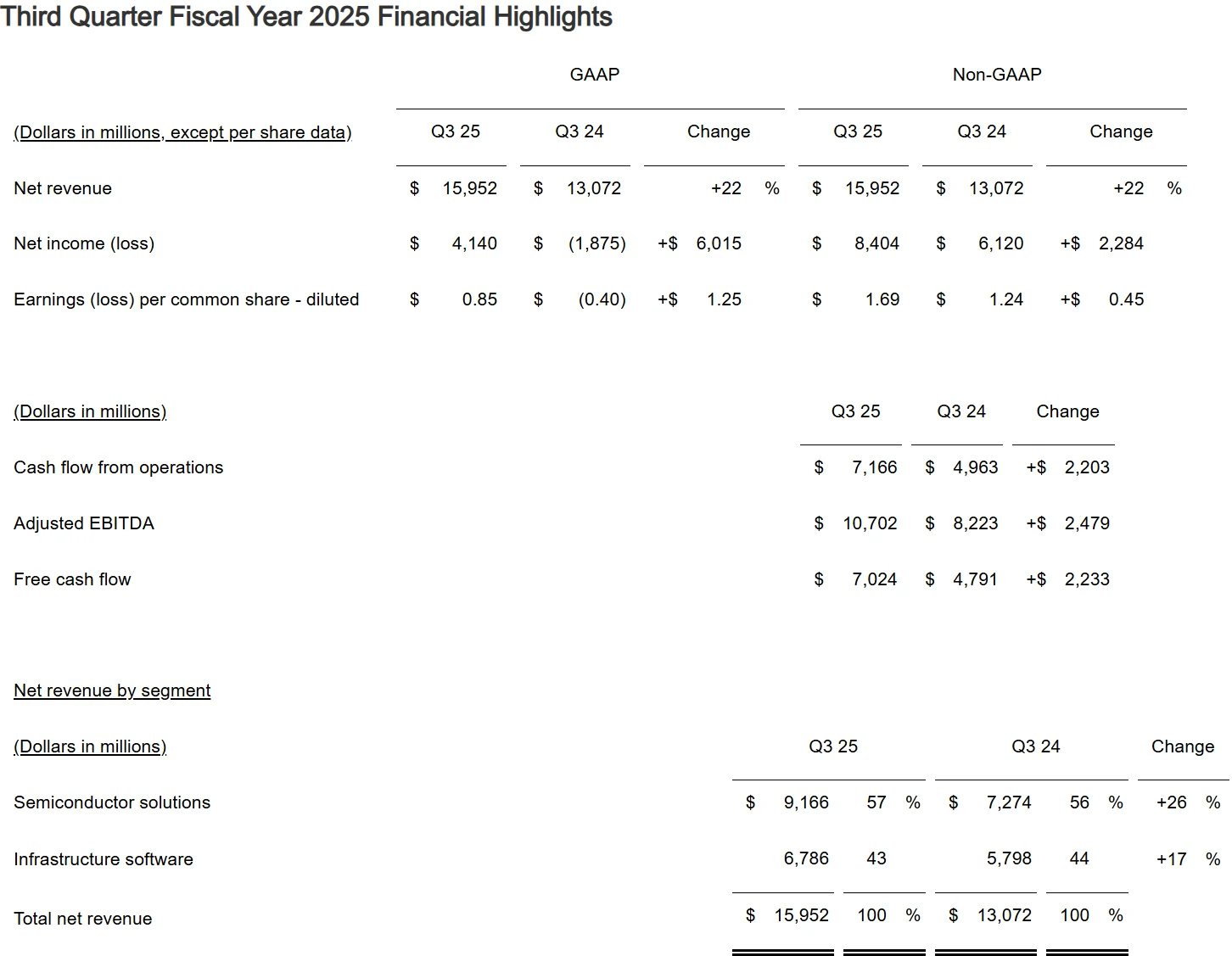

Beyond AMD, companies specializing in custom chips and interconnect technologies, such as Broadcom, may also face pressure. Broadcom reported $5.2 billion in AI-related revenue in Q3 FY2025, primarily from providing inter-chip connectivity solutions and custom AI chip design services to companies such as Google and Meta.

Source: Broadcom

With the Intel-Nvidia collaboration, however, data center customers could adopt integrated "x86 CPU + Nvidia GPU" solutions directly, without third-party interconnect technologies. At the same time, Nvidia's custom GPU capabilities could divert custom chip orders from Broadcom, particularly in the mid-to-low-end AI server segment.

Notably, Nvidia's subscription price of $23.28 per share represents a 6.5% discount to Intel's previous closing price. The resulting stake of approximately 4% places Nvidia among Intel's top five shareholders. That level gives Nvidia meaningful influence over the collaboration without raising questions of control, which helps keep the partnership from becoming one-sided.
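
The stated discount can be inverted to recover the previous close it implies. The calculation below uses only the $23.28 price and the 6.5% discount; the helper name is illustrative.

```python
def implied_reference_price(deal_price: float, discount: float) -> float:
    """Reference price from which `deal_price` is lower by the fraction `discount`."""
    return deal_price / (1 - discount)

previous_close = implied_reference_price(23.28, 0.065)
print(f"Implied previous close: ${previous_close:.2f}")  # $24.90
```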

More importantly, this equity alignment creates room for deeper collaboration in the future. Although Huang explicitly stated that the current cooperation focuses on product development and does not involve Intel's foundry business, he noted that Nvidia is "continuously evaluating Intel's foundry technology." This implies that if Intel's 3nm and 2nm process yields reach acceptable levels, Intel could eventually produce some of Nvidia's mid-to-low-end GPUs. For Intel, Nvidia's investment is also a vote of market confidence that could attract more external customers to its foundry services and relieve pressure on its struggling contract manufacturing business.

Restructuring of the Industry Chain

A central concern in the market is whether this collaboration will impact TSMC's dominant position. In the short term, TSMC's advantages remain overwhelming. Analysts including Ming-Chi Kuo note that TSMC's leadership in advanced process nodes (3nm and below), measured by yield rates and cost control, will likely persist until at least 2030. Nvidia's flagship GPUs (such as the Blackwell B100) demand extremely advanced manufacturing processes that only TSMC can currently provide. Meanwhile, Intel's foundry business still primarily serves internal orders and lacks external customer validation, making it unable to handle Nvidia's high-end chip orders in the near term.

Source: TSMC

Although Intel is aggressively advancing its IDM 2.0 strategy and accelerating capacity expansion, its foundry technology and production capabilities cannot meet the requirements for manufacturing Nvidia's high-end chips in the short term. For now, TSMC's position as the primary foundry partner for Nvidia's flagship processors remains unshaken.

However, in the long term, TSMC must guard against the risk of losing mid-to-low-end orders. As Intel's packaging technology and mature process yields improve, Nvidia may shift production of certain mid-to-low-end GPUs and AI inference chips to Intel to reduce costs and diversify supply chain risks.

For TSMC, this means further consolidating its lead in advanced processes while diversifying its customer base across segments such as automotive and high-performance computing (HPC), to avoid overreliance on any single customer such as Nvidia. In line with this strategy, TSMC is actively expanding into automotive electronics, which accounted for 5% of its Q2 2025 revenue, with plans to raise that share to 15% by 2028.

Source: TSMC

At a deeper level, this collaboration may signal a shift in the competitive logic of the semiconductor industry: from vertically integrated models where a single company covers the entire chain from design to manufacturing and packaging, toward collaborative models where multiple firms form ecosystem alliances based on core strengths.

Previously, industry leaders often pursued control of the full chain: Intel insisted on an integrated "design + manufacturing" approach, while Samsung attempted to cover everything from memory to logic chips. However, as technological complexity increases and geopolitical risks escalate, it has become difficult for any single company to lead in every segment. The Nvidia-Intel collaboration may demonstrate that alliances built on complementary strengths can respond more effectively to market demands.

This model could proliferate across the industry. For example, AMD might partner with Samsung to leverage its foundry capabilities and reduce reliance on TSMC; Qualcomm and MediaTek could collaborate in specific areas to counter Apple's in-house chip development. The industry may transition from a zero-sum game to limited coopetition, where the strength of ecosystem alliances becomes the core differentiator.

In the coming years, competition in the semiconductor industry may no longer be about company versus company, but rather alliance versus alliance. Those who can build more stable and efficient ecosystems will gain the upper hand in the new era of competition.

Disclaimer: The content of this article does not constitute a recommendation or investment advice for any financial products.
